Knowledge

- Identify logs used to troubleshoot storage issues

- Describe the attributes of the VMFS-5 file system

Skills and Abilities

- Use esxcli to troubleshoot multipathing and PSA-related issues

- Use esxcli to troubleshoot VMkernel storage module configurations

- Use esxcli to troubleshoot iSCSI related issues

- Troubleshoot NFS mounting and permission issues

- Use esxtop/resxtop and vscsiStats to identify storage performance issues

- Configure and troubleshoot VMFS datastores using vmkfstools

- Troubleshoot snapshot and re-signaturing issues

- Analyze log files to identify storage and multipathing problems

Use esxcli to troubleshoot multipathing and PSA-related issues

Official Documentation:

vSphere Command-Line Interface Concepts and Examples, Chapter 4 “Managing Storage”, section “Managing Paths”, page 42.

Multipathing, PSA and the related commands have been discussed in Objective 1.3 “Configure and manage complex multipathing and PSA plugins”.

More information:

TheFoglite blog: Use esxcli to troubleshoot storage

Some very useful commands:

| Operation | Command | Information |
|---|---|---|
| List the SCSI stats for the SCSI paths in the system for FC/iSCSI LUNs | esxcli storage core path stats get | Displays the quantity of read and write operations and failures |
| List all the SCSI paths for FC/iSCSI LUNs | esxcli storage core path list | Displays each path – Includes the CTL, Adapter, Device, transport mode, state, and plugin type/details |
| Only get path information for a single device | esxcli storage core path list -d deviceID | Displays path information for the specified device – Includes the CTL, Adapter, Device, transport mode, state, and plugin type/details |
| List the adapters in the system | esxcli storage core adapter list | Displays the HBA, driver, state, UID, and Description of each installed adapter |
| Turn off a path | esxcli storage core path set --state off --path vmhba??:C?:T?:L? | N/A |
| Activate a path | esxcli storage core path set --state active --path vmhba??:C?:T?:L? | N/A |
| List devices claimed by the Native Multipath Plugin | esxcli storage nmp device list | Displays Device, Array Type/Config, and Path Selection Policy information |
| List the available Storage Array Type Plugins | esxcli storage nmp satp list | Displays Name, Default Path Selection Policy and Description for each SATP |
| List the available Path Selection Policies | esxcli storage nmp psp list | Displays the name and description of all path selection policies |
| List the paths claimed by the NMP | esxcli storage nmp path list | Displays the SATP and PSP information associated with each path |
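As a rough illustration of the "turn off a path" and "activate a path" commands in the table, the Python sketch below checks that a runtime path name has the vmhbaN:Cx:Ty:Lz shape and then assembles the matching esxcli argument list. The helper name `path_set_command` and the sample path are hypothetical, and the sketch only builds the command; it does not run anything on a host.

```python
import re

# Runtime path names look like vmhba2:C0:T1:L4 (adapter, channel, target, LUN).
PATH_RE = re.compile(r"^vmhba\d+:C\d+:T\d+:L\d+$")


def path_set_command(path, state):
    """Return the esxcli argv that sets a path to 'active' or 'off'."""
    if state not in ("active", "off"):
        raise ValueError("state must be 'active' or 'off'")
    if not PATH_RE.match(path):
        raise ValueError("unexpected runtime path name: %r" % path)
    return ["esxcli", "storage", "core", "path", "set",
            "--state", state, "--path", path]


# Hypothetical path, used only to show the resulting command line.
print(" ".join(path_set_command("vmhba2:C0:T1:L4", "off")))
```

Validating the name before building the command is a small guard against disabling the wrong path because of a typo; list the paths first and copy the runtime name from that output.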

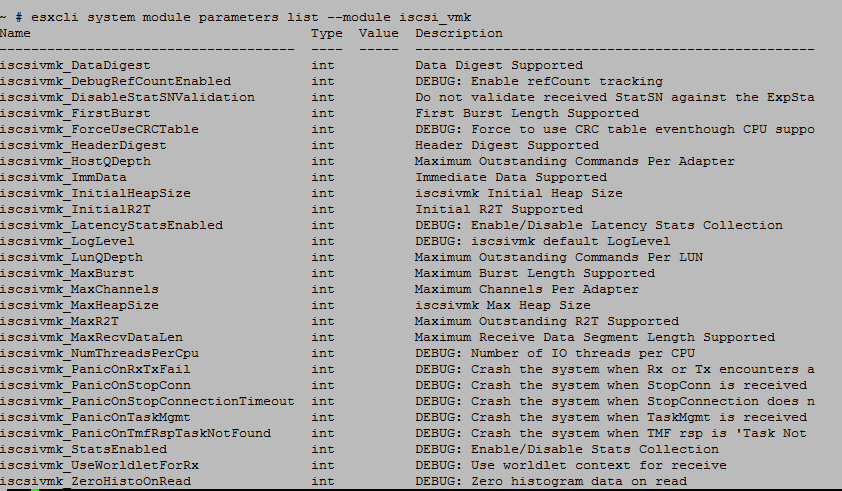

Use esxcli to troubleshoot VMkernel storage module configurations

Official Documentation:

vSphere Storage Guide, Chapter 16 “VMKernel and Storage”, page 149.

More information:

TheFoglite blog: Use esxcli to troubleshoot storage

Below you will find a basic list of commands for managing VMkernel modules. Examples of modifying advanced configuration options for these modules can be found on this knowledgebase page.

Get a listing of the installed VMkernel modules

esxcli system module list

esxcli system module list | grep iscsi

Get a listing of advanced parameters for a given module

esxcli system module parameters list --module ModuleName

iscsi_vmk Module

Use esxcli to troubleshoot iSCSI related issues

Official Documentation:

vSphere Command-Line Interface Concepts and Examples, Chapter 5 “Managing iSCSI Storage”, page 53.

ESXi systems include iSCSI technology to access remote storage using an IP network. You can use the vSphere Client, commands in the esxcli iscsi namespace, or the vicfg-iscsi command to configure both hardware and software iSCSI storage for your ESXi system.

More information:

TheFoglite blog: Use esxcli to troubleshoot storage

Use esxcli to troubleshoot iSCSI related issues

| Operation | Command | Information |
|---|---|---|
| List the iSCSI HBAs | esxcli iscsi adapter list | Displays the Adapter, Driver, State, UID, and description of all iSCSI HBAs in the system (software or hardware) |
| List the settings for an iSCSI HBA | esxcli iscsi adapter get -A AdapterName | Displays the iSCSI information for an iSCSI HBA (software or hardware) |
| List network portals for iSCSI adapters (iSCSI vmknic) | esxcli iscsi networkportal list | Displays iSCSI vmhbas and network information |
| List physical network portals for iSCSI (vmnic) | esxcli iscsi physicalnetworkportal list | Displays uplinks that are available for use with iSCSI adapters |
| List iSCSI sessions | esxcli iscsi session list | Displays information about each iSCSI session |
| Check if software iSCSI is enabled/disabled | esxcli iscsi software get | Displays true if software iSCSI is enabled |
| List logical vmknic information | esxcli iscsi logicalnetworkportal list | Displays Adapter, vmknic, MAC and MAC compliance/validity |
| Get information about iBFT boot details (software-initiated iSCSI boot from disk) | esxcli iscsi ibftboot get | Displays iBFT details |
| List iSCSI IMA plugins | esxcli iscsi plugin list | Displays Name, Vendor, version details and build for installed IMA (iSCSI Management API) plugins |

Troubleshoot NFS mounting and permission issues

Official Documentation:

vSphere Command-Line Interface Concepts and Examples, Chapter 4 “Managing Storage”, section “Managing NFS/NAS Datastores”, page 48.

Recommended reading on this objective is VMware KB “Troubleshooting connectivity issues to an NFS datastore on ESX/ESXi hosts”.

Validate that each troubleshooting step below is true for your environment. The steps provide instructions or a link to a document, for validating the step and taking corrective action as necessary. The steps are ordered in the most appropriate sequence to isolate the issue and identify the proper resolution. Do not skip a step.

- Check the MTU size configuration on the port group which is designated as the NFS VMkernel port group. If it is set to anything other than 1500 or 9000, test the connectivity using the vmkping command:

# vmkping -I vmkN -s nnnn xxx.xxx.xxx.xxx

Where:

- vmkN is vmk0, vmk1, etc., depending on which vmknic is assigned to NFS.

Note: The -I option to select the VMkernel interface is available only in ESXi 5.1 and later. Without this option in 4.x/5.0, the host uses the VMkernel interface associated with the destination network in the host routing table. The host routing table can be viewed using the esxcfg-route -l command.

- nnnn is the MTU size minus 28 bytes. For example, for an MTU size of 9000, use 8972. 20 bytes are used for the IP header, and 8 bytes are used for the ICMP header.

- xxx.xxx.xxx.xxx is the IP address of the target NFS storage.

To reveal the vmknics, run the command:

esxcfg-vmknic -l

Check the output for the vmk_ interface associated with NFS.

- Verify connectivity to the NFS server and ensure that it is accessible through the firewalls. For more information, see Cannot connect to NFS network share (1007352).

- Use netcat (nc) to see if you can reach the NFS server nfsd TCP/UDP port (default 2049) on the storage array from the host:

# nc -z array-IP 2049

Example output:

Connection to 10.1.10.100 2049 port [tcp/http] succeeded!

Note: The netcat command is available with ESX 4.x and ESXi 4.1 and later.

- Verify that the ESX host can vmkping the NFS server. For more information, see Testing VMkernel network connectivity with the vmkping command (1003728).

- Verify that the NFS host can ping the VMkernel IP of the ESX host.

- Verify that the virtual switch being used for storage is configured correctly. For more information, see the Networking Attached Storage section of the ESX Configuration Guide.

Note: Ensure that there are enough available ports on the virtual switch. For more information, see Network cable of a virtual machine appears unplugged (1004883) and No network connectivity if all ports are in use (1009103).

- Verify that the storage array is listed in the Hardware Compatibility Guide. For more information, see the VMware Compatibility Guide. Consult your hardware vendor to ensure that the array is configured properly.

Note: Some array vendors have a minimum microcode/firmware version that is required to work with ESX.

- Verify that the physical hardware functions correctly. Consult your hardware vendor for more details.

- If this is a Windows server, verify that it is correctly configured for NFS. For more information, see Troubleshooting the failed process of adding a datastore from a Windows Services NFS device (1004490).
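The payload size passed to vmkping -s in the MTU check above follows from simple header arithmetic: an IPv4 header takes 20 bytes and an ICMP echo header takes 8, so 28 bytes of every packet are not payload. A minimal Python sketch of that calculation, with the hypothetical helper name `vmkping_payload`:

```python
IP_HEADER_BYTES = 20    # IPv4 header without options
ICMP_HEADER_BYTES = 8   # ICMP echo request header


def vmkping_payload(mtu):
    """Largest ICMP payload that fits in a single packet of the given MTU."""
    payload = mtu - IP_HEADER_BYTES - ICMP_HEADER_BYTES
    if payload <= 0:
        raise ValueError("MTU is too small to carry an ICMP echo request")
    return payload


for mtu in (1500, 9000):
    print("MTU %d -> vmkping -s %d" % (mtu, vmkping_payload(mtu)))
# MTU 1500 -> vmkping -s 1472
# MTU 9000 -> vmkping -s 8972
```

If a ping with the computed size fails while a default-size ping succeeds, some device along the path is probably using a smaller MTU than the VMkernel port.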

To troubleshoot a mount being read-only:

- Verify that the permissions of the NFS server have not been set to read-only for this ESX host.

- Verify that the NFS share was not mounted with the read-only box selected.

- For troubleshooting NFS by enabling the /NFS/LogNfsStat3 advanced parameters, see Using nfsstat3 to troubleshoot NFS error: Failed to get object: No connection (2010132).

If the above troubleshooting has not resolved the issue and there are still locked files, attempting to unmount the NAS volume may fail with an error similar to:

WARNING: NFS: 1797: 8564d0cc-58f6-4573-886f-693fa721098c has open files, cannot be unmounted

To troubleshoot the lock:

- Identify the ESX/ESXi host holding the lock. For more information, see Investigating virtual machine file locks on ESXi/ESX (10051).

- Restart the management agents on the host. For more information, see Restarting the Management agents on an ESXi or ESX host (1003490).

- If the lock remains, a host reboot is required to break the lock.

Note: If you wish to investigate the cause of the locking issue further, make sure to capture the host logs before rebooting.

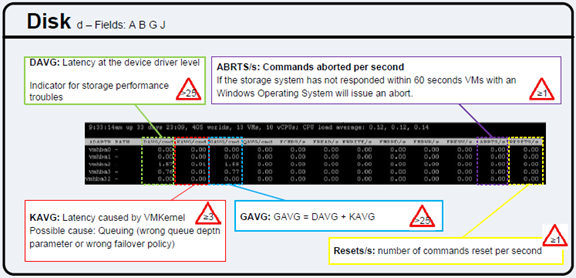

Use esxtop/resxtop and vscsiStats to identify storage performance issues

Official Documentation:

It’s not an official document, but it is very useful: vSphere 5 ESXTOP quick Overview for Troubleshooting http://www.vmworld.net/wp-content/uploads/2012/05/Esxtop_Troubleshooting_eng.pdf

See the Disk section.

DAVG: Latency at the device driver level; an indicator of storage performance trouble.

ABRTS/s: Commands aborted per second. If the storage system has not responded within 60 seconds, VMs with a Windows OS will issue an abort.

KAVG: Latency caused by the VMkernel. Possible cause: queuing (wrong queue depth parameter or wrong failover policy).

GAVG: Latency as it appears to the guest VM; GAVG = DAVG + KAVG.

Resets/s: Number of commands reset per second.
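The relationship between the three latency counters can be sketched in a few lines of Python. The function names are hypothetical, and the default limits of 25 ms for DAVG and 2 ms for KAVG are commonly quoted rules of thumb, treated here as assumptions rather than official values.

```python
def guest_latency(davg_ms, kavg_ms):
    """GAVG is the sum of device latency (DAVG) and kernel latency (KAVG)."""
    return davg_ms + kavg_ms


def flag_latency(davg_ms, kavg_ms, davg_limit=25.0, kavg_limit=2.0):
    """Return hints for counters above the (assumed) rule-of-thumb limits."""
    findings = []
    if davg_ms > davg_limit:
        findings.append("DAVG high: look at the array, HBA or fabric")
    if kavg_ms > kavg_limit:
        findings.append("KAVG high: look at queuing in the VMkernel")
    return findings


print(guest_latency(12.5, 0.5))   # 13.0
print(flag_latency(30.0, 0.1))    # only the DAVG hint
```

Splitting GAVG into its two parts is what makes the counters useful: a high GAVG with a low KAVG points at the storage side, while a high KAVG points at the host.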

More information:

See Duncan Epping blog Yellow Bricks about ESXTOP: http://www.yellow-bricks.com/esxtop/

Configure and troubleshoot VMFS datastores using vmkfstools

Official Documentation:

vSphere Storage Guide, Chapter 22 “Using vmkfstools”, page 205.

vmkfstools is one of the ESXi Shell commands for managing VMFS volumes and virtual disks. You can perform many storage operations using the vmkfstools command.

vmkfstools Command Syntax

Generally, you do not need to log in as the root user to run the vmkfstools commands. However, some commands, such as the file system commands, might require the root user login.

The vmkfstools command supports the following command syntax:

vmkfstools conn_options options target.

Target specifies a partition, device, or path to apply the command option to.

| Argument | Description |
|---|---|
| Options | One or more command-line options and associated arguments that you use to specify the activity for vmkfstools to perform, for example, choosing the disk format when creating a new virtual disk. After entering the option, specify a target on which to perform the operation. Target can indicate a partition, device, or path. |
| Partition | Specifies disk partitions. This argument uses a disk_ID:P format, where disk_ID is the device ID returned by the storage array and P is an integer that represents the partition number. The partition digit must be greater than zero (0) and should correspond to a valid VMFS partition. |
| Device | Specifies devices or logical volumes. This argument uses a path name in the ESXi device file system. The path name begins with /vmfs/devices, which is the mount point of the device file system. The format to use depends on the type of device. |
| Path | Specifies a VMFS file system or file. This argument is an absolute or relative path that names a directory symbolic link, a raw device mapping, or a file under /vmfs. |

vmkfstools Options

The vmkfstools command has several options. Some of the options are suggested for advanced users only.

The long and single-letter forms of the options are equivalent. For example, the following commands are identical.

vmkfstools --createfs vmfs5 --blocksize 1m disk_ID:P

vmkfstools -C vmfs5 -b 1m disk_ID:P

-v Suboption

The -v suboption indicates the verbosity level of the command output.

The format for this suboption is as follows:

-v --verbose number

You specify the number value as an integer from 1 through 10.

You can specify the -v suboption with any vmkfstools option. If the output of the option is not suitable for use with the -v suboption, vmkfstools ignores -v.

NOTE Because you can include the -v suboption in any vmkfstools command line, -v is not included as a suboption in the option descriptions.

File System Options

File system options allow you to create a VMFS file system. These options do not apply to NFS. You can perform many of these tasks through the vSphere Client.

Listing Attributes of a VMFS Volume

Use the vmkfstools command to list attributes of a VMFS volume.

-P --queryfs

-h --human-readable

When you use this option on any file or directory that resides on a VMFS volume, the option lists the attributes of the specified volume. The listed attributes include the file system label, if any, the number of extents comprising the specified VMFS volume, the UUID, and a listing of the device names where each extent resides.

NOTE If any device backing VMFS file system goes offline, the number of extents and available space change accordingly.

You can specify the -h suboption with the -P option. If you do so, vmkfstools lists the capacity of the volume in a more readable form, for example, 5k, 12.1M, or 2.1G.

Creating a VMFS File System

Use the vmkfstools command to create a VMFS datastore.

-C --createfs [vmfs3|vmfs5]

-b --blocksize block_size kK|mM

-S --setfsname datastore

This option creates a VMFS3 or VMFS5 datastore on the specified SCSI partition, such as disk_ID:P. The partition becomes the file system’s head partition.

NOTE Use the VMFS3 option when you need legacy hosts to access the datastore.
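To make the combination of -C, -b and -S concrete, here is a small Python sketch that assembles a vmkfstools command line for creating a datastore. The helper name `createfs_command`, the datastore label and the disk ID are all hypothetical. The sketch assumes the target is given as a full /vmfs/devices/disks path and enforces the rule that the partition number must be greater than zero; it only builds the argument list and does not execute it.

```python
def createfs_command(disk_id, partition, label, fs_type="vmfs5", block_size="1m"):
    """Build the argv for creating a VMFS datastore on disk_ID:P."""
    if fs_type not in ("vmfs3", "vmfs5"):
        raise ValueError("fs_type must be 'vmfs3' or 'vmfs5'")
    if partition < 1:
        raise ValueError("partition number must be greater than zero")
    # Assumption: address the partition through the device file system.
    target = "/vmfs/devices/disks/%s:%d" % (disk_id, partition)
    return ["vmkfstools", "-C", fs_type, "-b", block_size, "-S", label, target]


# Hypothetical disk ID and label, for illustration only.
print(" ".join(createfs_command("naa.EXAMPLE", 1, "datastore_test")))
```

Because creating a file system destroys whatever was on the partition, building and reviewing the command before running it is a cheap safety step.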

Extending an Existing VMFS Volume

Use the vmkfstools command to add an extent to a VMFS volume.

-Z --spanfs span_partition head_partition

This option extends the VMFS file system with the specified head partition by spanning it across the partition specified by span_partition. You must specify the full path name, for example /vmfs/devices/disks/disk_ID:1. Each time you use this option, you extend a VMFS volume with a new extent so that the volume spans multiple partitions.

CAUTION When you run this option, you lose all data that previously existed on the SCSI device you specified in span_partition.

Growing an Existing Extent

Instead of adding a new extent to a VMFS datastore, you can grow an existing extent using the vmkfstools -G command.

Use the following option to increase the size of a VMFS datastore after the underlying storage had its capacity increased.

-G --growfs device device

This option grows an existing VMFS datastore or its extent. For example, vmkfstools --growfs /vmfs/devices/disks/disk_ID:1 /vmfs/devices/disks/disk_ID:1

Upgrading a VMFS Datastore

You can upgrade a VMFS3 to VMFS5 datastore.

CAUTION The upgrade is a one-way process. After you have converted a VMFS3 datastore to VMFS5, you cannot revert it back.

When upgrading the datastore, use the following command: vmkfstools -T /vmfs/volumes/UUID

NOTE All hosts accessing the datastore must support VMFS5. If any ESX/ESXi host version 4.x or earlier is using the VMFS3 datastore, the upgrade fails and the MAC address of the host that is actively using the datastore is displayed.

Virtual Disk Options

Virtual disk options allow you to set up, migrate, and manage virtual disks stored in VMFS and NFS file systems. You can also perform most of these tasks through the vSphere Client.

Supported Disk Formats

When you create or clone a virtual disk, you can use the -d --diskformat suboption to specify the format for the disk.

Choose from the following formats:

- zeroedthick (default) – Space required for the virtual disk is allocated during creation. Any data remaining on the physical device is not erased during creation, but is zeroed out on demand at a later time on first write from the virtual machine. The virtual machine does not read stale data from disk.

- eagerzeroedthick – Space required for the virtual disk is allocated at creation time. In contrast to zeroedthick format, the data remaining on the physical device is zeroed out during creation. It might take much longer to create disks in this format than to create other types of disks.

- thin – Thin-provisioned virtual disk. Unlike with the thick format, space required for the virtual disk is not allocated during creation, but is supplied, zeroed out, on demand at a later time.

- rdm:device – Virtual compatibility mode raw disk mapping.

- rdmp:device – Physical compatibility mode (pass-through) raw disk mapping.

- 2gbsparse – A sparse disk with 2GB maximum extent size. You can use disks in this format with hosted VMware products, such as VMware Fusion, Player, Server, or Workstation. However, you cannot power on sparse disk on an ESXi host unless you first re-import the disk with vmkfstools in a compatible format, such as thick or thin.

NFS Disk Formats

The only disk formats you can use for NFS are thin, thick, zeroedthick and 2gbsparse.

Thick, zeroedthick and thin formats usually behave the same because the NFS server and not the ESXi host determines the allocation policy. The default allocation policy on most NFS servers is thin. However, on NFS servers that support Storage APIs – Array Integration, you can create virtual disks in zeroedthick format. The reserve space operation enables NFS servers to allocate and guarantee space.

Creating a Virtual Disk

Use the vmkfstools command to create a virtual disk.

-c --createvirtualdisk size[kK|mM|gG]

-a --adaptertype [buslogic|lsilogic|ide] srcfile

-d --diskformat [thin|zeroedthick|eagerzeroedthick]

This option creates a virtual disk at the specified path on a datastore. Specify the size of the virtual disk. When you enter the value for size, you can indicate the unit type by adding a suffix of k (kilobytes), m (megabytes), or g (gigabytes). The unit type is not case sensitive. vmkfstools interprets either k or K to mean kilobytes. If you don’t specify a unit type, vmkfstools defaults to bytes.

You can specify the following suboptions with the -c option.

- -a specifies the device driver that is used to communicate with the virtual disks. You can choose between BusLogic, LSI Logic, or IDE drivers.

- -d specifies disk formats.
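The size suffix rules described above can be illustrated with a short Python sketch. The function name `parse_size` is hypothetical, and the sketch assumes binary multiples (1k = 1024 bytes), which the text does not state explicitly. The `default_unit_bytes` parameter models the unit applied when no suffix is given: bytes for -c, as described above.

```python
# Assumption: suffixes are binary multiples (1k = 1024 bytes).
UNIT_BYTES = {"k": 1024, "m": 1024 ** 2, "g": 1024 ** 3}


def parse_size(text, default_unit_bytes=1):
    """Convert a size such as '512m' or '4G' to a byte count."""
    text = text.strip()
    suffix = text[-1:].lower()          # the unit type is not case sensitive
    if suffix in UNIT_BYTES:
        return int(text[:-1]) * UNIT_BYTES[suffix]
    return int(text) * default_unit_bytes


print(parse_size("4G"))      # 4294967296
print(parse_size("2048"))    # 2048, no suffix means bytes here
```

Always writing the suffix explicitly avoids surprises, since the default unit is easy to misremember.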

Initializing a Virtual Disk

Use the vmkfstools command to initialize a virtual disk.

-w --writezeros

This option cleans the virtual disk by writing zeros over all its data. Depending on the size of your virtual disk and the I/O bandwidth to the device hosting the virtual disk, completing this command might take a long time.

CAUTION When you use this command, you lose any existing data on the virtual disk.

Inflating a Thin Virtual Disk

Use the vmkfstools command to inflate a thin virtual disk.

-j --inflatedisk

This option converts a thin virtual disk to eagerzeroedthick, preserving all existing data. The option allocates and zeroes out any blocks that are not already allocated.

Removing Zeroed Blocks

Use the vmkfstools command to convert any thin, zeroedthick, or eagerzeroedthick virtual disk to a thin disk with zeroed blocks removed.

-K --punchzero

This option deallocates all zeroed out blocks and leaves only those blocks that were allocated previously and contain valid data. The resulting virtual disk is in thin format.

Converting a Zeroedthick Virtual Disk to an Eagerzeroedthick Disk

Use the vmkfstools command to convert any zeroedthick virtual disk to an eagerzeroedthick disk.

-k --eagerzero

While performing the conversion, this option preserves any data on the virtual disk.

Deleting a Virtual Disk

This option deletes a virtual disk file at the specified path on the VMFS volume.

-U --deletevirtualdisk

Renaming a Virtual Disk

This option renames a virtual disk file at the specified path on the VMFS volume.

You must specify the original file name or file path oldName and the new file name or file path newName.

-E --renamevirtualdisk oldName newName

Cloning a Virtual Disk or RDM

This option creates a copy of a virtual disk or raw disk you specify.

-i --clonevirtualdisk srcfile -d --diskformat [rdm:device|rdmp:device|thin|2gbsparse]

You can use the -d suboption for the -i option. This suboption specifies the disk format for the copy you create.

A non-root user is not allowed to clone a virtual disk or an RDM.

Extending a Virtual Disk

This option extends the size of a disk allocated to a virtual machine after the virtual machine has been created.

-X --extendvirtualdisk newSize [kK|mM|gG]

You must power off the virtual machine that uses this disk file before you enter this command. You might have to update the file system on the disk so the guest operating system can recognize and use the new size of the disk and take advantage of the extra space.

You specify the newSize parameter in kilobytes, megabytes, or gigabytes by adding a k (kilobytes), m (megabytes), or g (gigabytes) suffix. The unit type is not case sensitive. vmkfstools interprets either k or K to mean kilobytes. If you don’t specify a unit type, vmkfstools defaults to kilobytes.

The newSize parameter defines the entire new size, not just the increment you add to the disk.

For example, to extend a 4g virtual disk by 1g, enter: vmkfstools -X 5g disk name.
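Because newSize is the total size and not the increment, it is easy to pass the wrong number. The Python sketch below, using the hypothetical helper `extend_command` and a made-up disk path, derives the value to pass to -X from the current size and the amount to add, working in whole gigabytes.

```python
def extend_command(current_gb, increment_gb, vmdk_path):
    """Build the vmkfstools argv that grows a disk by increment_gb gigabytes."""
    if increment_gb <= 0:
        raise ValueError("increment must be positive")
    new_size_gb = current_gb + increment_gb   # -X takes the entire new size
    return ["vmkfstools", "-X", "%dg" % new_size_gb, vmdk_path]


# Hypothetical disk path; a 4g disk extended by 1g needs '-X 5g'.
print(" ".join(extend_command(4, 1, "/vmfs/volumes/datastore1/vm1/vm1.vmdk")))
```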

You can extend the virtual disk to the eagerzeroedthick format by using the -d eagerzeroedthick option.

NOTE Do not extend the base disk of a virtual machine that has snapshots associated with it. If you do, you can no longer commit the snapshot or revert the base disk to its original size.

Upgrading Virtual Disks

This option converts the specified virtual disk file from ESX Server 2 format to the ESXi format.

-M --migratevirtualdisk

Creating a Virtual Compatibility Mode Raw Device Mapping

This option creates a Raw Device Mapping (RDM) file on a VMFS volume and maps a raw LUN to this file.

After this mapping is established, you can access the LUN as you would a normal VMFS virtual disk. The file length of the mapping is the same as the size of the raw LUN it points to.

-r --createrdm device

When specifying the device parameter, use the following format:

/vmfs/devices/disks/disk_ID:P

Creating a Physical Compatibility Mode Raw Device Mapping

This option lets you map a pass-through raw device to a file on a VMFS volume. This mapping lets a virtual machine bypass ESXi SCSI command filtering when accessing its virtual disk. This type of mapping is useful when the virtual machine needs to send proprietary SCSI commands, for example, when SAN-aware software runs on the virtual machine.

-z --createrdmpassthru device

After you establish this type of mapping, you can use it to access the raw disk just as you would any other VMFS virtual disk.

When specifying the device parameter, use the following format:

/vmfs/devices/disks/disk_ID

Listing Attributes of an RDM

This option lets you list the attributes of a raw disk mapping.

-q --queryrdm

This option prints the name of the raw disk RDM. The option also prints other identification information, like the disk ID, for the raw disk.

Displaying Virtual Disk Geometry

This option gets information about the geometry of a virtual disk.

-g --geometry

The output is in the form: Geometry information C/H/S, where C represents the number of cylinders, H represents the number of heads, and S represents the number of sectors.

NOTE When you import virtual disks from hosted VMware products to the ESXi host, you might see a disk geometry mismatch error message. A disk geometry mismatch might also be the cause of problems loading a guest operating system or running a newly-created virtual machine.

Checking and Repairing Virtual Disks

Use this option to check or repair a virtual disk in case of an unclean shutdown.

-x --fix [check|repair]

Checking Disk Chain for Consistency

With this option, you can check the entire disk chain. You can determine if any of the links in the chain are corrupted or any invalid parent-child relationships exist.

-e --chainConsistent

Storage Device Options

Device options allow you to perform administrative tasks for physical storage devices.

Managing SCSI Reservations of LUNs

The -L option lets you reserve a SCSI LUN for exclusive use by the ESXi host, release a reservation so that other hosts can access the LUN, and reset a reservation, forcing all reservations from the target to be released.

-L --lock [reserve|release|lunreset|targetreset|busreset] device

CAUTION Using the -L option can interrupt the operations of other servers on a SAN. Use the -L option only when troubleshooting clustering setups.

Unless specifically advised by VMware, never use this option on a LUN hosting a VMFS volume.

You can specify the -L option in several ways:

- -L reserve – Reserves the specified LUN. After the reservation, only the server that reserved that LUN can access it. If other servers attempt to access that LUN, a reservation error results.

- -L release – Releases the reservation on the specified LUN. Other servers can access the LUN again.

- -L lunreset – Resets the specified LUN by clearing any reservation on the LUN and making the LUN available to all servers again. The reset does not affect any of the other LUNs on the device. If another LUN on the device is reserved, it remains reserved.

- -L targetreset – Resets the entire target. The reset clears any reservations on all the LUNs associated with that target and makes the LUNs available to all servers again.

- -L busreset – Resets all accessible targets on the bus. The reset clears any reservation on all the LUNs accessible through the bus and makes them available to all servers again.

When entering the device parameter, use the following format:

/vmfs/devices/disks/disk_ID:P

Breaking Device Locks

The -B option allows you to forcibly break the device lock on a particular partition.

-B --breaklock device

When entering the device parameter, use the following format:

/vmfs/devices/disks/disk_ID:P

You can use this command when a host fails in the middle of a datastore operation, such as grow extent, add extent, or resignaturing. When you issue this command, make sure that no other host is holding the lock.

More Information:

VMware KB 1009829 Manually creating a VMFS volume using vmkfstools -C

Troubleshoot snapshot and re-signaturing issues

Official Documentation:

TheFoglite blog: Troubleshoot snapshot and resignaturing issues

VMware KB1011387, vSphere handling of LUNs detected as snapshot LUN.

The Foglite blog describes the process the best. Original blog post below.

Each VMFS 5 datastore contains a Universally Unique Identifier (UUID). The UUID is a unique hexadecimal number generated by VMware and is stored in the file system metadata, in a structure called the superblock.

When a duplicated (byte-for-byte) copy of a datastore or underlying LUN is executed, the resultant copy will contain the same UUID. As a VMware administrator, you have two options when bringing the duplicate datastore online:

- VMware prevents two datastores with the same UUID from mounting. You may unmount the initial datastore and bring the duplicate datastore with the same UUID online.

- Or, you may create a new UUID (aka resignature) for the datastore and then both disks may be brought online at the same time. A resignatured disk is no longer a duplicate of the original.

Windows administrators can relate to this when they clone Windows systems. A new SID must be generated for any cloned machine. Otherwise, you will encounter duplicate SID errors on your network when you bring multiple machines with the same SID online at the same time.

Before you begin

- Creating a new signature for a drive is irreversible

- A datastore with extents may only be resignatured if all extents are online

- The VMs that use a datastore that was resignatured must be reassociated with the disk in their respective configuration files. The VMs must also be re-registered within vCenter.

Resignature a datastore using esxcli

- Acquire the list of copies: esxcli storage vmfs snapshot list

- Resignature the copy: esxcli storage vmfs snapshot resignature --volume-label=<label>|--volume-uuid=<id>
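The resignature command takes either a volume label or a volume UUID, not both. A minimal Python sketch of that choice follows; the helper name `resignature_command` and the sample label are hypothetical, and the sketch only builds the argument list without running it.

```python
def resignature_command(volume_label=None, volume_uuid=None):
    """Build the esxcli argv for resignaturing one detected VMFS copy."""
    # Exactly one selector must be supplied.
    if (volume_label is None) == (volume_uuid is None):
        raise ValueError("give exactly one of volume_label or volume_uuid")
    if volume_label is not None:
        selector = "--volume-label=%s" % volume_label
    else:
        selector = "--volume-uuid=%s" % volume_uuid
    return ["esxcli", "storage", "vmfs", "snapshot", "resignature", selector]


# Hypothetical label, taken from the output of the snapshot list command.
print(" ".join(resignature_command(volume_label="datastore1")))
```

Since resignaturing is irreversible, copying the label or UUID from the snapshot list output instead of typing it by hand reduces the chance of resignaturing the wrong copy.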

Resignature a datastore using vSphere Client

- Enter the Host view (Ctrl + Shift + H)

- Click Storage under the Hardware frame

- Click Add Storage in the right window frame

- Select Disk/LUN and click Next

- Select the device to add and click Next

- Select Assign new signature and click Next

- Review your changes and then click Finish

More information:

TheFoglite blog: VMFS 5 Resignaturing

VMware Blog: VMware Snapshots

Analyze log files to identify storage and multipathing problems

Official Documentation:

See previous objective 6.1, analysing logs.

ESXi 5.0 Host Log Files

Logs for an ESXi 5.0 host are grouped according to the source component:

- /var/log/auth.log: ESXi Shell authentication success and failure.

- /var/log/dhclient.log: DHCP client service, including discovery, address lease requests and renewals.

- /var/log/esxupdate.log: ESXi patch and update installation logs.

- /var/log/hostd.log: Host management service logs, including virtual machine and host Task and Events, communication with the vSphere Client and vCenter Server vpxa agent, and SDK connections.

- /var/log/shell.log: ESXi Shell usage logs, including enable/disable and every command entered. For more information, see the Managing vSphere with Command-Line Interfaces section of the vSphere 5 Command Line documentation and Auditing ESXi Shell logins and commands in ESXi 5.x (2004810).

- /var/log/sysboot.log: Early VMkernel startup and module loading.

- /var/log/boot.gz: A compressed file that contains boot log information and can be read using zcat /var/log/boot.gz|more.

- /var/log/syslog.log: Management service initialization, watchdogs, scheduled tasks and DCUI use.

- /var/log/usb.log: USB device arbitration events, such as discovery and pass-through to virtual machines.

- /var/log/vob.log: VMkernel Observation events, similar to vob.component.event.

- /var/log/vmkernel.log: Core VMkernel logs, including device discovery, storage and networking device and driver events, and virtual machine startup.

- /var/log/vmkwarning.log: A summary of Warning and Alert log messages excerpted from the VMkernel logs.

- /var/log/vmksummary.log: A summary of ESXi host startup and shutdown, and an hourly heartbeat with uptime, number of virtual machines running, and service resource consumption. For more information, see Format of the ESXi 5.0 vmksummary log file (2004566).

Note: For information on sending logs to another location (such as a datastore or remote syslog server), see Configuring syslog on ESXi 5.0 (2003322)

Logs from vCenter Server Components on ESXi 5.0

When an ESXi 5.0 host is managed by vCenter Server 5.0, two components are installed, each with its own logs:

- /var/log/vpxa.log: vCenter Server vpxa agent logs, including communication with vCenter Server and the Host Management hostd agent.

- /var/log/fdm.log: vSphere High Availability logs, produced by the fdm service. For more information, see the vSphere HA Security section of the vSphere 5.0 Availability Guide.

Note: If persistent scratch space is configured, many of these logs are located on the scratch volume and the /var/log/ directory contains symlinks to the persistent storage location. Rotated logs are compressed at the persistent location and/or at /var/run/log/. For more information, see Creating a persistent scratch location for ESXi (1033696).

Other exam notes

- The Saffageek VCAP5-DCA Objectives http://thesaffageek.co.uk/vcap5-dca-objectives/

- Paul Grevink The VCAP5-DCA diaries http://paulgrevink.wordpress.com/the-vcap5-dca-diaries/

- Edward Grigson VCAP5-DCA notes http://www.vexperienced.co.uk/vcap5-dca/

- Jason Langer VCAP5-DCA notes http://www.virtuallanger.com/vcap-dca-5/

- The Foglite VCAP5-DCA notes http://thefoglite.com/vcap-dca5-objective/

VMware vSphere official documentation

- VMware vSphere Basics Guide

- vSphere Installation and Setup Guide

- vSphere Upgrade Guide

- vCenter Server and Host Management Guide

- vSphere Virtual Machine Administration Guide

- vSphere Host Profiles Guide

- vSphere Networking Guide

- vSphere Storage Guide

- vSphere Security Guide

- vSphere Resource Management Guide

- vSphere Availability Guide

- vSphere Monitoring and Performance Guide

- vSphere Troubleshooting

- VMware vSphere Examples and Scenarios Guide

Disclaimer.

The information in this article is provided “AS IS” with no warranties, and confers no rights. This article does not represent the thoughts, intentions, plans or strategies of my employer. It is solely my opinion.