Knowledge

- Identify VMware FT hardware requirements

- Identify VMware FT compatibility requirements

Skills and Abilities

- Modify VM and ESXi host settings to allow for FT compatibility

- Use VMware best practices to prepare a vSphere environment for FT

- Configure FT logging

- Prepare the infrastructure for FT compliance

- Test FT failover, secondary restart, and application fault tolerance in an FT Virtual Machine

Modify VM and ESXi host settings to allow for FT compatibility

Official Documentation:

vSphere Availability Guide, Chapter 3, “Providing Fault Tolerance for Virtual Machines”, page 37.

You can enable vSphere Fault Tolerance for your virtual machines to ensure business continuity with higher levels of availability and data protection than is offered by vSphere HA.

Fault Tolerance is built on the ESXi host platform (using the VMware vLockstep technology), and it provides continuous availability by having identical virtual machines run in virtual lockstep on separate hosts.

To obtain the optimal results from Fault Tolerance you should be familiar with how it works, how to enable it for your cluster and virtual machines, and the best practices for its usage.

How Fault Tolerance Works

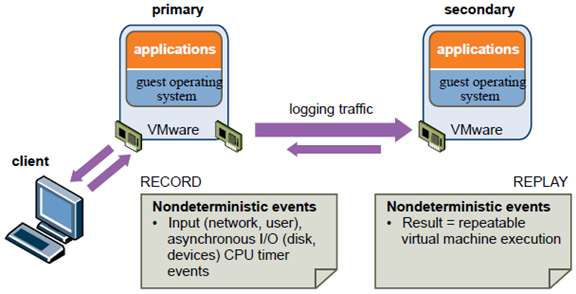

vSphere Fault Tolerance provides continuous availability for virtual machines by creating and maintaining a Secondary VM that is identical to, and continuously available to replace, the Primary VM in the event of a failover situation.

You can enable Fault Tolerance for most mission-critical virtual machines. A duplicate virtual machine, called the Secondary VM, is created and runs in virtual lockstep with the Primary VM. VMware vLockstep captures inputs and events that occur on the Primary VM and sends them to the Secondary VM, which is running on another host. Using this information, the Secondary VM executes identically to the Primary VM.

Because the Secondary VM is in virtual lockstep with the Primary VM, it can take over execution at any point without interruption, thereby providing fault tolerant protection.
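The record/replay idea behind vLockstep can be illustrated with a toy model. This is a minimal sketch under heavy simplifying assumptions, not VMware's implementation: `ToyVM`, `run_primary`, and `replay_secondary` are hypothetical names, a seeded random generator stands in for nondeterministic inputs such as network packets or interrupts, and the "log" is an in-memory list rather than traffic on the FT logging network. It shows why the Secondary VM ends up in the same state: it consumes the Primary's recorded inputs instead of generating its own.

```python
import random
from dataclasses import dataclass


@dataclass
class ToyVM:
    """Hypothetical toy VM whose entire state is one integer."""
    state: int = 0

    def step(self, value: int) -> None:
        # Deterministic: the same input sequence always yields the same state.
        self.state = (self.state * 31 + value) % 1_000_003


def run_primary(vm: ToyVM, steps: int, seed: int) -> list[int]:
    """Run the Primary and record every nondeterministic input it consumes."""
    rng = random.Random(seed)  # stands in for packets, interrupts, timer reads
    log: list[int] = []
    for _ in range(steps):
        value = rng.randint(0, 255)
        log.append(value)  # in real FT, this would travel over the logging network
        vm.step(value)
    return log


def replay_secondary(vm: ToyVM, log: list[int]) -> None:
    """Feed the Secondary the Primary's recorded inputs, in order."""
    for value in log:
        vm.step(value)


primary, secondary = ToyVM(), ToyVM()
replay_secondary(secondary, run_primary(primary, steps=1_000, seed=42))
print(primary.state == secondary.state)  # True
```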

NOTE FT logging traffic between Primary and Secondary VMs is unencrypted and contains guest network and storage I/O data, as well as the memory contents of the guest operating system. This traffic can include sensitive data such as passwords in plaintext. To avoid such data being divulged, ensure that this network is secured, especially to avoid ‘man-in-the-middle’ attacks. For example, you could use a private network for FT logging traffic.

The Primary and Secondary VMs continuously exchange heartbeats. This exchange allows the virtual machine pair to monitor the status of one another to ensure that Fault Tolerance is continually maintained. A transparent failover occurs if the host running the Primary VM fails, in which case the Secondary VM is immediately activated to replace the Primary VM. A new Secondary VM is started and Fault Tolerance redundancy is reestablished within a few seconds. If the host running the Secondary VM fails, it is also immediately replaced.

In either case, users experience no interruption in service and no loss of data.

A fault tolerant virtual machine and its secondary copy are not allowed to run on the same host. This restriction ensures that a host failure cannot result in the loss of both virtual machines. You can also use VM-Host affinity rules to dictate which hosts designated virtual machines can run on. If you use these rules, be aware that for any Primary VM that is affected by such a rule, its associated Secondary VM is also affected by that rule. For more information about affinity rules, see the vSphere Resource Management documentation.

Fault Tolerance avoids “split-brain” situations, which can lead to two active copies of a virtual machine after recovery from a failure. Atomic file locking on shared storage is used to coordinate failover so that only one side continues running as the Primary VM and a new Secondary VM is respawned automatically.
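The "only one side continues running" rule can be pictured as contenders racing for a single exclusive lock. In this toy sketch, a `threading.Lock` acquired with `blocking=False` stands in for the atomic file lock on shared storage; `failover_race` and the contender names are hypothetical, and real FT coordinates between hosts, not between threads in one process.

```python
import threading


def failover_race(contenders: list[str]) -> dict[str, str]:
    """Toy model: every contender tries to take one exclusive lock; only one can win."""
    ownership = threading.Lock()  # stands in for the atomic lock on shared storage
    start_line = threading.Barrier(len(contenders))
    results: dict[str, str] = {}
    results_guard = threading.Lock()

    def contend(name: str) -> None:
        start_line.wait()  # release all contenders at the same moment
        won = ownership.acquire(blocking=False)  # never blocks; True only for the first caller
        with results_guard:
            results[name] = "goes live as Primary" if won else "terminates"

    threads = [threading.Thread(target=contend, args=(name,)) for name in contenders]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return results


print(failover_race(["Primary on Host A", "Secondary on Host B"]))
```

Which side wins varies from run to run, but exactly one side ever goes live, which is the property that prevents two active copies.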

NOTE The anti-affinity check is performed when the Primary VM is powered on. The Primary and Secondary VMs can reside on the same host while both are powered off. This is normal behavior: when the Primary VM is powered on, the Secondary VM is started on a different host.

Fault Tolerance Checklist

The following checklist contains cluster, host, and virtual machine requirements that you need to be aware of before using vSphere Fault Tolerance.

Review this list before setting up Fault Tolerance. You can also use the VMware SiteSurvey utility (download at http://www.vmware.com/download/shared_utilities.html) to better understand the configuration issues associated with the cluster, host, and virtual machines being used for vSphere FT.

Cluster Requirements for Fault Tolerance

You must meet the following cluster requirements before you use Fault Tolerance.

- Host certificate checking enabled. See “Enable Host Certificate Checking,” on page 43.

- At least two FT-certified hosts running the same Fault Tolerance version or host build number. The Fault Tolerance version number appears on a host’s Summary tab in the vSphere Client.

NOTE For legacy hosts prior to ESX/ESXi 4.1, this tab lists the host build number instead. Patches can cause host build numbers to vary between ESX and ESXi installations. To ensure that your legacy hosts are FT compatible, do not mix legacy ESX and ESXi hosts in an FT pair.

- ESXi hosts have access to the same virtual machine datastores and networks. See “Best Practices for Fault Tolerance,” on page 49.

- Fault Tolerance logging and vMotion networking configured. See “Configure Networking for Host Machines,” on page 43.

- vSphere HA cluster created and enabled. See “Creating a vSphere HA Cluster,” on page 25. vSphere HA must be enabled before you can power on fault tolerant virtual machines or add a host to a cluster that already supports fault tolerant virtual machines.
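To turn the cluster checklist into something you can run against collected inventory data, the sketch below encodes each item as a check. It is a minimal illustration that assumes you have already gathered the values (for example, by hand from the vSphere Client); the `Host` and `Cluster` classes and `cluster_ft_issues` are hypothetical and do not correspond to any vSphere API object.

```python
from dataclasses import dataclass, field


@dataclass
class Host:
    """Hypothetical host record; the fields mirror the checklist above."""
    name: str
    ft_version: str  # as shown on the host's Summary tab
    ft_certified: bool
    ft_logging_configured: bool
    vmotion_configured: bool
    datastores: frozenset[str] = frozenset()
    networks: frozenset[str] = frozenset()


@dataclass
class Cluster:
    ha_enabled: bool
    host_cert_checking: bool
    hosts: list[Host] = field(default_factory=list)


def cluster_ft_issues(cluster: Cluster) -> list[str]:
    """Return the cluster-level FT requirements that are not met."""
    issues: list[str] = []
    if not cluster.host_cert_checking:
        issues.append("host certificate checking is disabled")
    if not cluster.ha_enabled:
        issues.append("vSphere HA is not enabled")

    # At least two FT-certified hosts must share the same FT version.
    versions: dict[str, int] = {}
    for host in cluster.hosts:
        if host.ft_certified:
            versions[host.ft_version] = versions.get(host.ft_version, 0) + 1
    if max(versions.values(), default=0) < 2:
        issues.append("fewer than two FT-certified hosts share an FT version")

    # Every host should see the same VM datastores and networks.
    if len({h.datastores for h in cluster.hosts}) > 1:
        issues.append("hosts do not see the same datastores")
    if len({h.networks for h in cluster.hosts}) > 1:
        issues.append("hosts do not see the same networks")

    for host in cluster.hosts:
        if not (host.ft_logging_configured and host.vmotion_configured):
            issues.append(f"{host.name}: FT logging or vMotion networking missing")
    return issues


ds, nets = frozenset({"shared-ds01"}), frozenset({"VM Network"})
hosts = [Host(f"esx0{i}", "1.0", True, True, True, ds, nets) for i in (1, 2)]  # "1.0" is a placeholder
print(cluster_ft_issues(Cluster(ha_enabled=True, host_cert_checking=True, hosts=hosts)))  # []
```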

Host Requirements for Fault Tolerance

You must meet the following host requirements before you use Fault Tolerance.

- Hosts must have processors from the FT-compatible processor group. It is also highly recommended that the hosts’ processors are compatible with one another. See the VMware knowledge base article at http://kb.vmware.com/kb/1008027 for information on supported processors.

- Hosts must be licensed for Fault Tolerance.

- Hosts must be certified for Fault Tolerance.

See http://www.vmware.com/resources/compatibility/search.php and select Search by Fault Tolerant Compatible Sets to determine if your hosts are certified.

- The configuration for each host must have Hardware Virtualization (HV) enabled in the BIOS.

To confirm the compatibility of the hosts in the cluster to support Fault Tolerance, you can also run profile compliance checks as described in “Create vSphere HA Cluster and Check Compliance,” on page 45.

NOTE When a host is unable to support Fault Tolerance, you can view the reasons for this on the host’s Summary tab in the vSphere Client. Click the blue caption icon next to the Host Configured for FT field to see a list of Fault Tolerance requirements that the host does not meet.

Virtual Machine Requirements for Fault Tolerance

You must meet the following virtual machine requirements before you use Fault Tolerance.

- No unsupported devices attached to the virtual machine. See “Fault Tolerance Interoperability,” on page 41.

- Virtual machines must be stored in virtual RDM or virtual machine disk (VMDK) files that are thick provisioned. If a virtual machine is stored in a VMDK file that is thin provisioned and an attempt is made to enable Fault Tolerance, a message appears indicating that the VMDK file must be converted. To perform the conversion, you must power off the virtual machine.

- Incompatible features must not be running with the fault tolerant virtual machines. See “Fault Tolerance Interoperability,” on page 41.

- Virtual machine files must be stored on shared storage. Acceptable shared storage solutions include Fibre Channel, iSCSI (hardware and software), NFS, and NAS.

- Only virtual machines with a single vCPU are compatible with Fault Tolerance.

- Virtual machines must be running on one of the supported guest operating systems. See the VMware knowledge base article at http://kb.vmware.com/kb/1008027 for more information.
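The virtual machine checklist can be sketched the same way. This is a hypothetical pre-flight check, assuming you record each VM's vCPU count, guest OS support status, snapshot count, and disk backings yourself; the class names and the backing labels (`"vmdk"`, `"virtual_rdm"`, `"physical_rdm"`) are illustrative, not vSphere identifiers. The snapshot check comes from the interoperability section that follows.

```python
from dataclasses import dataclass, field


@dataclass
class Disk:
    backing: str  # hypothetical labels: "vmdk", "virtual_rdm", "physical_rdm"
    thin_provisioned: bool = False
    on_shared_storage: bool = True


@dataclass
class VirtualMachine:
    name: str
    num_vcpus: int
    guest_os_supported: bool  # per the KB article referenced above
    disks: list[Disk] = field(default_factory=list)
    snapshot_count: int = 0


def vm_ft_issues(vm: VirtualMachine) -> list[str]:
    """Return the VM-level FT requirements that are not met."""
    issues: list[str] = []
    if vm.num_vcpus != 1:
        issues.append(f"{vm.num_vcpus} vCPUs configured; FT supports exactly one")
    if not vm.guest_os_supported:
        issues.append("guest OS is not on the supported list")
    if vm.snapshot_count:
        issues.append("remove or commit snapshots before enabling FT")
    for index, disk in enumerate(vm.disks):
        label = f"disk {index}"
        if disk.backing == "physical_rdm":
            issues.append(f"{label}: physical RDM; use a virtual RDM instead")
        elif disk.backing == "vmdk" and disk.thin_provisioned:
            issues.append(f"{label}: thin provisioned; power off so FT can convert it")
        if not disk.on_shared_storage:
            issues.append(f"{label}: not on shared storage")
    return issues


vm = VirtualMachine("sql01", num_vcpus=2, guest_os_supported=True,
                    disks=[Disk("vmdk", thin_provisioned=True)])
for issue in vm_ft_issues(vm):
    print(issue)
```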

Fault Tolerance Interoperability

Before configuring vSphere Fault Tolerance, you should be aware of the features and products Fault Tolerance cannot interoperate with.

vSphere Features Not Supported with Fault Tolerance

The following vSphere features are not supported for fault tolerant virtual machines.

- Snapshots. Snapshots must be removed or committed before Fault Tolerance can be enabled on a virtual machine. In addition, it is not possible to take snapshots of virtual machines on which Fault Tolerance is enabled.

- Storage vMotion. You cannot invoke Storage vMotion for virtual machines with Fault Tolerance turned on. To migrate the storage, temporarily turn off Fault Tolerance, perform the Storage vMotion action, and then turn Fault Tolerance back on.

- Linked clones. You cannot enable Fault Tolerance on a virtual machine that is a linked clone, nor can you create a linked clone from an FT-enabled virtual machine.

- Virtual Machine Backups. You cannot back up an FT-enabled virtual machine using Storage API for Data Protection, VMware Data Recovery, or similar backup products that require the use of a virtual machine snapshot, as performed by ESXi. To back up a fault tolerant virtual machine in this manner, you must first disable FT, then re-enable FT after performing the backup. Storage array-based snapshots do not affect FT.

Features and Devices Incompatible with Fault Tolerance

For a virtual machine to be compatible with Fault Tolerance, the Virtual Machine must not use the following features or devices.

| Incompatible Feature or Device | Corrective Action |
|---|---|
| Symmetric multiprocessor (SMP) virtual machines. Only virtual machines with a single vCPU are compatible with Fault Tolerance. | Reconfigure the virtual machine as a single vCPU. Many workloads have good performance configured as a single vCPU. |
| Physical Raw Disk Mapping (RDM). | Reconfigure virtual machines with physical RDM-backed virtual devices to use virtual RDMs instead. |
| CD-ROM or floppy virtual devices backed by a physical or remote device. | Remove the CD-ROM or floppy virtual device or reconfigure the backing with an ISO installed on shared storage. |
| Paravirtualized guests. | If paravirtualization is not required, reconfigure the virtual machine without a VMI ROM. |
| USB and sound devices. | Remove these devices from the virtual machine. |
| N_Port ID Virtualization (NPIV). | Disable the NPIV configuration of the virtual machine. |
| NIC passthrough. | This feature is not supported by Fault Tolerance, so it must be turned off. |
| vlance networking drivers. | Fault Tolerance does not support virtual machines that are configured with vlance virtual NIC cards. However, vmxnet2, vmxnet3, and e1000 are fully supported. |
| Virtual disks backed with thin-provisioned storage or thick-provisioned disks that do not have clustering features enabled. | When you turn on Fault Tolerance, the conversion to the appropriate disk format is performed by default. You must power off the virtual machine to trigger this conversion. |
| Hot-plugging devices. | The hot plug feature is automatically disabled for fault tolerant virtual machines. To hot plug devices (either adding or removing), you must momentarily turn off Fault Tolerance, perform the hot plug, and then turn on Fault Tolerance. NOTE: When using Fault Tolerance, changing the settings of a virtual network card while a virtual machine is running is a hot-plug operation, since it requires “unplugging” the network card and then “plugging” it in again. For example, to change the network that the virtual NIC of a running virtual machine is connected to, you must turn off FT first. |
| Extended Page Tables/Rapid Virtualization Indexing (EPT/RVI). | EPT/RVI is automatically disabled for virtual machines with Fault Tolerance turned on. |
| Serial or parallel ports. | Remove these devices from the virtual machine. |
| IPv6. | Use IPv4 addresses with the FT logging NIC. |
| Video devices that have 3D enabled. | Fault Tolerance does not support video devices that have 3D enabled. |
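The device rows of the table above map naturally onto a lookup from device type to corrective action. A minimal sketch, assuming you already have a list of device types per VM; the device-type labels and `audit_devices` are hypothetical, and the action texts paraphrase the table. Items FT handles automatically (hot plug, EPT/RVI, disk conversion) and the SMP row are left out.

```python
# Hypothetical device-type labels mapped to the corrective actions in the table above.
CORRECTIVE_ACTIONS: dict[str, str] = {
    "physical_rdm": "Reconfigure to use a virtual RDM.",
    "physical_cdrom": "Remove the device or back it with an ISO on shared storage.",
    "physical_floppy": "Remove the device or back it with an ISO on shared storage.",
    "vmi_rom": "If paravirtualization is not required, remove the VMI ROM.",
    "usb": "Remove the device.",
    "sound": "Remove the device.",
    "serial_port": "Remove the device.",
    "parallel_port": "Remove the device.",
    "npiv": "Disable the NPIV configuration.",
    "nic_passthrough": "Turn off NIC passthrough.",
    "vlance": "Use vmxnet2, vmxnet3, or e1000 instead.",
    "video_3d": "Disable 3D on the video device.",
}


def audit_devices(device_types: list[str]) -> list[tuple[str, str]]:
    """Return (device, corrective action) for each incompatible device found."""
    return [(d, CORRECTIVE_ACTIONS[d]) for d in device_types if d in CORRECTIVE_ACTIONS]


for device, action in audit_devices(["e1000", "usb", "serial_port"]):
    print(f"{device}: {action}")
```

Supported devices such as `e1000` simply produce no entry, so an empty result means none of the listed incompatibilities were found.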

Use VMware best practices to prepare a vSphere environment for FT

Official Documentation:

vSphere Availability Guide, Chapter 3, “Providing Fault Tolerance for Virtual Machines”, section “Best Practices for Fault Tolerance”, page 49.

Performance Best Practices for VMware vSphere 5.0: Chapter 4 has a section on FT.

VMware Fault Tolerance Recommendations and Considerations on VMware vSphere 4

Best Practices for Fault Tolerance

To ensure optimal Fault Tolerance results, VMware recommends that you follow certain best practices.

In addition to the following information, see the white paper VMware Fault Tolerance Recommendations and Considerations at http://www.vmware.com/resources/techresources/10040.

Host Configuration

Consider the following best practices when configuring your hosts.

- Hosts running the Primary and Secondary VMs should operate at approximately the same processor frequencies, otherwise the Secondary VM might be restarted more frequently. Platform power management features that do not adjust based on workload (for example, power capping and enforced low frequency modes to save power) can cause processor frequencies to vary greatly. If Secondary VMs are being restarted on a regular basis, disable all power management modes on the hosts running fault tolerant virtual machines or ensure that all hosts are running in the same power management modes.

- Apply the same instruction set extension configuration (enabled or disabled) to all hosts. The process for enabling or disabling instruction sets varies among BIOSes. See the documentation for your hosts’ BIOSes about how to configure instruction sets.

Homogeneous Clusters

vSphere Fault Tolerance can function in clusters with nonuniform hosts, but it works best in clusters with compatible nodes. When constructing your cluster, all hosts should have the following configuration:

- Processors from the same compatible processor group.

- Common access to datastores used by the virtual machines.

- The same virtual machine network configuration.

- The same ESXi version.

- The same Fault Tolerance version number (or host build number for hosts prior to ESX/ESXi 4.1).

- The same BIOS settings (power management and hyperthreading) for all hosts.

Run Check Compliance to identify incompatibilities and to correct them.
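Check Compliance is the supported tool, but the idea behind a homogeneity check is simple to sketch: for each attribute in the list above, group hosts by value and report any attribute with more than one value. The attribute names and values below are hypothetical placeholders you would fill in from your own inventory.

```python
from collections import defaultdict
from collections.abc import Hashable

# Hypothetical attribute names for the homogeneity items listed above.
ATTRIBUTES = (
    "cpu_group",
    "datastores",
    "vm_networks",
    "esxi_version",
    "ft_version",
    "power_management",
    "hyperthreading",
)


def find_mismatches(hosts: dict[str, dict[str, Hashable]]) -> dict[str, dict[Hashable, list[str]]]:
    """Group host names by value for every attribute that is not identical on all hosts."""
    mismatches: dict[str, dict[Hashable, list[str]]] = {}
    for attribute in ATTRIBUTES:
        groups: dict[Hashable, list[str]] = defaultdict(list)
        for host_name, config in hosts.items():
            groups[config.get(attribute)].append(host_name)  # missing attribute -> None
        if len(groups) > 1:
            mismatches[attribute] = dict(groups)
    return mismatches


esx01 = {
    "cpu_group": "group-1",
    "datastores": frozenset({"shared-ds01"}),
    "vm_networks": ("VM Network",),
    "esxi_version": "5.0",
    "ft_version": "1.0",  # placeholder value
    "power_management": "high performance",
    "hyperthreading": True,
}
esx02 = {**esx01, "power_management": "balanced"}
print(find_mismatches({"esx01": esx01, "esx02": esx02}))
# {'power_management': {'high performance': ['esx01'], 'balanced': ['esx02']}}
```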

Performance

To increase the bandwidth available for logging traffic between the Primary and Secondary VMs, use a 10Gbit NIC and enable jumbo frames.

Store ISOs on Shared Storage for Continuous Access

Store ISOs that are accessed by virtual machines with Fault Tolerance enabled on shared storage that is accessible to both instances of the fault tolerant virtual machine. If you use this configuration, the CD-ROM in the virtual machine continues operating normally, even when a failover occurs.

For virtual machines with Fault Tolerance enabled, you might use ISO images that are accessible only to the Primary VM. In such a case, the Primary VM can access the ISO, but if a failover occurs, the CD-ROM reports errors as if there is no media. This situation might be acceptable if the CD-ROM is being used for a temporary, noncritical operation such as an installation.

Avoid Network Partitions

A network partition occurs when a vSphere HA cluster has a management network failure that isolates some of the hosts from vCenter Server and from one another. See “Network Partitions,” on page 15. When a partition occurs, Fault Tolerance protection might be degraded.

In a partitioned vSphere HA cluster using Fault Tolerance, the Primary VM (or its Secondary VM) could end up in a partition managed by a master host that is not responsible for the virtual machine. When a failover is needed, a Secondary VM is restarted only if the Primary VM was in a partition managed by the master host responsible for it.

To ensure that your management network is less likely to have a failure that leads to a network partition, follow the recommendations in “Best Practices for Networking,” on page 34.

vSphere Fault Tolerance Configuration Recommendations

VMware recommends that you observe certain guidelines when configuring Fault Tolerance.

- In addition to non-fault tolerant virtual machines, you should have no more than four fault tolerant virtual machines (primaries or secondaries) on any single host. The number of fault tolerant virtual machines that you can safely run on each host is based on the sizes and workloads of the ESXi host and virtual machines, all of which can vary.

- If you are using NFS to access shared storage, use dedicated NAS hardware with at least a 1Gbit NIC to obtain the network performance required for Fault Tolerance to work properly.

- Ensure that a resource pool containing fault tolerant virtual machines has excess memory above the memory size of the virtual machines. The memory reservation of a fault tolerant virtual machine is set to the virtual machine’s memory size when Fault Tolerance is turned on. Without this excess in the resource pool, there might not be any memory available to use as overhead memory.

- Use a maximum of 16 virtual disks per fault tolerant virtual machine.

- To ensure redundancy and maximum Fault Tolerance protection, you should have a minimum of three hosts in the cluster. In a failover situation, this provides a host that can accommodate the new Secondary VM that is created.
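The numeric guidelines above can be checked against a planned layout before you enable FT. A minimal sketch: the constants come from the list above, while the function name, its inputs, and the choice to treat "excess memory" as "strictly more than the sum of FT VM memory sizes" are assumptions. Remember that four FT VMs per host is a guideline; the safe number depends on host and VM sizes and workloads.

```python
MAX_FT_VMS_PER_HOST = 4  # Primaries and Secondaries combined; a guideline, not a hard limit
MAX_DISKS_PER_FT_VM = 16
MIN_HOSTS_IN_CLUSTER = 3


def recommendation_warnings(
    host_count: int,
    ft_vms_per_host: dict[str, int],
    disks_per_ft_vm: dict[str, int],
    pool_memory_mb: int,
    ft_vm_memory_mb: list[int],
) -> list[str]:
    """Return the FT configuration recommendations that a planned layout violates."""
    warnings: list[str] = []
    if host_count < MIN_HOSTS_IN_CLUSTER:
        warnings.append(
            f"{host_count} hosts: use at least {MIN_HOSTS_IN_CLUSTER} so a new Secondary can start after failover"
        )
    for host, count in ft_vms_per_host.items():
        if count > MAX_FT_VMS_PER_HOST:
            warnings.append(f"{host}: {count} FT VMs exceeds {MAX_FT_VMS_PER_HOST}")
    for vm, disks in disks_per_ft_vm.items():
        if disks > MAX_DISKS_PER_FT_VM:
            warnings.append(f"{vm}: {disks} virtual disks exceeds {MAX_DISKS_PER_FT_VM}")
    # FT sets each VM's memory reservation to its full memory size, so the
    # resource pool needs headroom beyond that total for overhead memory.
    reserved_mb = sum(ft_vm_memory_mb)
    if pool_memory_mb <= reserved_mb:
        warnings.append(f"resource pool has {pool_memory_mb} MB; need more than {reserved_mb} MB")
    return warnings


print(recommendation_warnings(3, {"esx01": 4}, {"sql01": 8}, 10_240, [4096, 4096]))  # []
```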

Configure FT logging

Official Documentation:

vSphere Availability Guide, Chapter 3, “Providing Fault Tolerance for Virtual Machines”, page 41.

For each ESXi host supporting FT, VMware recommends a minimum of two physical gigabit NICs: one dedicated to Fault Tolerance logging and one dedicated to vMotion traffic.

NOTE: The vMotion and FT logging NICs must be on different subnets and IPv6 is not supported on the FT logging NIC.

A redundant configuration is highly recommended. The vSphere Availability Guide presents a configuration example using four physical NICs.
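The two hard rules for the logging network (IPv4 only, separate subnet from vMotion) plus the two-gigabit-NIC recommendation are easy to validate on paper before configuring VMkernel ports. This sketch uses Python's standard `ipaddress` module; the function name, its inputs, and the example addresses are hypothetical.

```python
import ipaddress


def ft_network_issues(ft_logging_if: str, vmotion_if: str, nic_speeds_mbps: list[int]) -> list[str]:
    """Check a planned VMkernel layout; interfaces are given as 'address/prefix'."""
    issues: list[str] = []
    gigabit_nics = [speed for speed in nic_speeds_mbps if speed >= 1000]
    if len(gigabit_nics) < 2:
        issues.append("fewer than two gigabit NICs for FT logging and vMotion")
    ft = ipaddress.ip_interface(ft_logging_if)
    vmotion = ipaddress.ip_interface(vmotion_if)
    if ft.version != 4:
        issues.append("FT logging NIC uses IPv6; use IPv4")
    elif vmotion.version == 4 and ft.network.overlaps(vmotion.network):
        issues.append("FT logging and vMotion share a subnet")
    return issues


print(ft_network_issues("10.10.1.11/24", "10.10.2.11/24", [1000, 1000]))  # []
print(ft_network_issues("10.10.1.11/24", "10.10.1.12/24", [1000]))
```

The second call reports both a missing gigabit NIC and a shared subnet.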

Prepare the infrastructure for FT compliance

Official Documentation:

vSphere Availability Guide, Chapter 3, “Providing Fault Tolerance for Virtual Machines”, page 37.

See previous objectives.

Test FT failover, secondary restart, and application fault tolerance in an FT Virtual Machine

Official Documentation:

vSphere Availability Guide, Chapter 3, “Providing Fault Tolerance for Virtual Machines”, page 37.

VMware KB1020058, Testing a VMware Fault Tolerance configuration.

VMware Fault Tolerance provides continuous availability to virtual machines by keeping a secondary protected virtual machine up and running and in sync in case a complete ESX host failure occurs in the environment.

However, some ESX host component failures may not cause complete server failure. In these cases, Fault Tolerance may appear to behave inconsistently.

Note: VMware recommends that you configure the Fault Tolerance logging NIC to use its own dedicated NIC of 1Gbit or faster.

Fault Tolerance failure scenarios

Currently, Fault Tolerance failures are only triggered when there is no communication between the primary and secondary virtual machines.

These three scenarios may occur:

- A deterministic scenario, where you can predict how a failover will occur. These events are deterministic:

  - An ESX host failure that causes complete host failure

  - The primary virtual machine process fails (or is non-responsive) on the ESX host

  - A Fault Tolerance test is initiated from vCenter Server

- A reactionary scenario, where a failover may occur but you do not know the expected outcome ahead of time. These events are reactionary:

  - Fault Tolerance logging NIC communication is interrupted or fails

  - Fault Tolerance logging NIC communication is very slow

  Reactionary events are not predictable because there is a race between the primary and secondary virtual machines to see which will go live. The virtual machine that wins the race stays alive and the other is terminated. The race prevents a split-brain scenario that can cause data corruption. In these cases you may see inconsistent results depending on which host wins ownership of the virtual machine.

- A no action taken scenario, where no failover occurs because Fault Tolerance does not monitor for this type of event. Fault Tolerance does not currently detect or respond to events that are not directly involved with its operation. No action is taken for these events:

  - Management network interruption or failure

  - Virtual machine network interruption or failure

  - HBA failures that do not affect the entire host

  - Any combination of the above
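The three scenario types amount to a classification of failure events, and only one class gives reliable manual test results. A minimal sketch of that classification; the event identifiers, `classify`, and `reliable_for_manual_testing` are hypothetical names. Because the text above only covers single events and combinations of no-action events, the sketch refuses to classify any other combination rather than guess.

```python
from enum import Enum


class Scenario(Enum):
    DETERMINISTIC = "failover outcome is predictable"
    REACTIONARY = "failover may occur; the race winner is not known in advance"
    NO_ACTION = "FT does not monitor this event; no failover"


# Hypothetical event identifiers for the events listed above.
EVENT_SCENARIOS: dict[str, Scenario] = {
    "complete_host_failure": Scenario.DETERMINISTIC,
    "primary_vm_process_failure": Scenario.DETERMINISTIC,
    "test_failover_from_vcenter": Scenario.DETERMINISTIC,
    "ft_logging_nic_failure": Scenario.REACTIONARY,
    "ft_logging_nic_very_slow": Scenario.REACTIONARY,
    "management_network_failure": Scenario.NO_ACTION,
    "vm_network_failure": Scenario.NO_ACTION,
    "partial_hba_failure": Scenario.NO_ACTION,
}


def classify(events: set[str]) -> Scenario:
    """Classify a single event, or any combination of no-action events."""
    kinds = {EVENT_SCENARIOS[event] for event in events}
    if len(events) == 1 or kinds == {Scenario.NO_ACTION}:
        return kinds.pop()
    raise ValueError("only single events or combinations of no-action events are classified")


def reliable_for_manual_testing(events: set[str]) -> bool:
    """Only deterministic events produce reliable manual test results."""
    return classify(events) is Scenario.DETERMINISTIC


print(classify({"management_network_failure", "partial_hba_failure"}).value)
```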

Testing Fault Tolerance

To test VMware Fault Tolerance properly, communication between the primary and secondary virtual machines must fail. VMware provides a Test Failover function from the virtual machine, which is the best option for testing VMware Fault Tolerance failover. If you want to perform manual failover tests, only deterministic events produce reliable results. Reactionary or no action taken scenarios can produce unexpected results.

These are proper testing scenarios with their expected outcomes:

Note: These tests assume two hosts, Host A and Host B, with the primary fault tolerant virtual machine running on Host A, and the secondary virtual machine running on Host B.

- Select the Test Failover function from the Fault Tolerance menu on the virtual machine.

This tests Fault Tolerance functionality in a fully supported and non-invasive way. In this scenario, the virtual machine fails over from Host A to Host B, and a secondary virtual machine is started back up again. VMware HA failover does not occur in this case.

- Host A complete failure

This scenario can be accomplished by pulling the host power cable, rebooting the host, or powering off the host from a remote KVM (such as iLO, DRAC, or RSA). The secondary virtual machine on Host B takes over immediately and continues to process information for the virtual machine. VMware HA failover occurs.

- Virtual machine process on Host A fails

This scenario can be accomplished by logging into Host A and terminating the active process for the virtual machine. The secondary virtual machine takes over and no VMware HA failover occurs. VMware does not recommend testing in this way. For more information on terminating a virtual machine, see Powering off an unresponsive virtual machine on an ESX host (1004340).
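When running these tests, it helps to write the expected outcomes down before you start and compare afterwards. A minimal sketch that encodes the three tests above under the same Host A / Host B assumption; the test keys, `ExpectedOutcome`, and `check_test_result` are hypothetical, and you supply the observed values yourself from the vSphere Client.

```python
from typing import NamedTuple


class ExpectedOutcome(NamedTuple):
    active_primary_host: str  # host running the Primary VM after the test
    ha_failover: bool  # whether VMware HA failover occurs
    discouraged: bool  # True where the text above says VMware does not recommend it


# Primary starts on Host A and Secondary on Host B, as assumed above.
EXPECTED = {
    "test_failover_menu": ExpectedOutcome("Host B", ha_failover=False, discouraged=False),
    "host_a_complete_failure": ExpectedOutcome("Host B", ha_failover=True, discouraged=False),
    "kill_vm_process_on_host_a": ExpectedOutcome("Host B", ha_failover=False, discouraged=True),
}


def check_test_result(test: str, primary_host: str, ha_failover: bool) -> list[str]:
    """Compare observed results with the expected outcome; return any differences."""
    expected = EXPECTED[test]
    differences: list[str] = []
    if primary_host != expected.active_primary_host:
        differences.append(f"Primary is on {primary_host}, expected {expected.active_primary_host}")
    if ha_failover != expected.ha_failover:
        observed = "occurred" if ha_failover else "did not occur"
        differences.append(f"HA failover {observed}, which is not what was expected")
    return differences


print(check_test_result("test_failover_menu", primary_host="Host B", ha_failover=False))  # []
```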

Other exam notes

- The Saffageek VCAP5-DCA Objectives http://thesaffageek.co.uk/vcap5-dca-objectives/

- Paul Grevink The VCAP5-DCA diaries http://paulgrevink.wordpress.com/the-vcap5-dca-diaries/

- Edward Grigson VCAP5-DCA notes http://www.vexperienced.co.uk/vcap5-dca/

- Jason Langer VCAP5-DCA notes http://www.virtuallanger.com/vcap-dca-5/

- The Foglite VCAP5-DCA notes http://thefoglite.com/vcap-dca5-objective/

Books

- Duncan Epping and Frank Denneman, VMware vSphere 5.0 Clustering Technical Deepdive.

- Duncan Epping and Frank Denneman, VMware vSphere 5.1 Clustering Deepdive.

VMware vSphere official documentation

| Document | Formats |
|---|---|
| VMware vSphere Basics Guide | html, epub, mobi |
| vSphere Installation and Setup Guide | html, epub, mobi |
| vSphere Upgrade Guide | html, epub, mobi |
| vCenter Server and Host Management Guide | html, epub, mobi |
| vSphere Virtual Machine Administration Guide | html, epub, mobi |
| vSphere Host Profiles Guide | html, epub, mobi |
| vSphere Networking Guide | html, epub, mobi |
| vSphere Storage Guide | html, epub, mobi |
| vSphere Security Guide | html, epub, mobi |
| vSphere Resource Management Guide | html, epub, mobi |
| vSphere Availability Guide | html, epub, mobi |
| vSphere Monitoring and Performance Guide | html, epub, mobi |
| vSphere Troubleshooting | html, epub, mobi |
| VMware vSphere Examples and Scenarios Guide | html, epub, mobi |

Disclaimer.

The information in this article is provided “AS IS” with no warranties, and confers no rights. This article does not represent the thoughts, intentions, plans or strategies of my employer. It is solely my opinion.