Knowledge

- Explain the Pluggable Storage Architecture (PSA) layout

Skills and Abilities

- Install and Configure PSA plug-ins

- Understand different multipathing policy functionalities

- Perform command line configuration of multipathing options

- Change a multipath policy

- Configure Software iSCSI port binding

Tools

- vSphere Command-Line Interface Installation and Scripting Guide

- ESX Configuration Guide

- ESXi Configuration Guide

- Fibre Channel SAN Configuration Guide

- iSCSI SAN Configuration Guide

- Product Documentation

- vSphere Client

- vSphere CLI

- esxcli

Notes

Explain the Pluggable Storage Architecture (PSA) layout

For a definition of PSA, see VMware KB 1011375, "What is Pluggable Storage Architecture (PSA) and Native Multipathing (NMP)?"

Pluggable Storage Architecture (PSA)

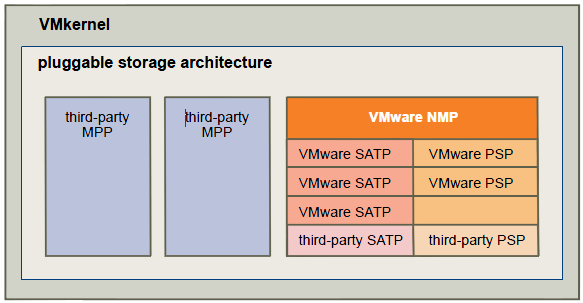

To manage storage multipathing, ESX/ESXi uses a special VMkernel layer, the Pluggable Storage Architecture (PSA). The PSA is an open modular framework that coordinates the simultaneous operation of multiple multipathing plugins (MPPs). PSA is a collection of VMkernel APIs that allow third-party hardware vendors to insert code directly into the ESX storage I/O path. This allows third-party software developers to design their own load balancing techniques and failover mechanisms for a particular storage array. The PSA coordinates the operation of the NMP and any additional third-party MPP.

Native Multipathing Plugin (NMP)

The VMkernel multipathing plugin that ESX/ESXi provides, by default, is the VMware Native Multipathing Plugin (NMP). The NMP is an extensible module that manages subplugins. There are two types of NMP subplugins: Storage Array Type Plugins (SATPs), and Path Selection Plugins (PSPs). SATPs and PSPs can be built-in and provided by VMware, or can be provided by a third party.

If more multipathing functionality is required, a third party can also provide an MPP to run in addition to, or as a replacement for, the default NMP.

VMware provides a generic Multipathing Plugin (MPP) called Native Multipathing Plugin (NMP).

What does NMP do?

- Manages physical path claiming and unclaiming.

- Registers and de-registers logical devices.

- Associates physical paths with logical devices.

- Processes I/O requests to logical devices:

  - Selects an optimal physical path for the request (load balance)

  - Performs actions necessary to handle failures and request retries.

- Supports management tasks such as abort or reset of logical devices.

More information about PSA can be found in the VMware Fibre Channel SAN Configuration Guide:

To manage storage multipathing, ESX/ESXi uses a special VMkernel layer, the Pluggable Storage Architecture (PSA). The PSA is an open, modular framework that coordinates the simultaneous operation of multiple multipathing plug-ins (MPPs).

The VMkernel multipathing plug-in that ESX/ESXi provides by default is the VMware Native Multipathing Plug-In (NMP). The NMP is an extensible module that manages sub plug-ins. There are two types of NMP sub plug-ins, Storage Array Type Plug-Ins (SATPs), and Path Selection Plug-Ins (PSPs). SATPs and PSPs can be built-in and provided by VMware, or can be provided by a third party.

If more multipathing functionality is required, a third party can also provide an MPP to run in addition to, or as a replacement for, the default NMP.

When coordinating the VMware NMP and any installed third-party MPPs, the PSA performs the following tasks:

- Loads and unloads multipathing plug-ins.

- Hides virtual machine specifics from a particular plug-in.

- Routes I/O requests for a specific logical device to the MPP managing that device.

- Handles I/O queuing to the logical devices.

- Implements logical device bandwidth sharing between virtual machines.

- Handles I/O queuing to the physical storage HBAs.

- Handles physical path discovery and removal.

- Provides logical device and physical path I/O statistics.

As the figure illustrates, multiple third-party MPPs can run in parallel with the VMware NMP. When installed, the third-party MPPs replace the behavior of the NMP and take complete control of the path failover and the load-balancing operations for specified storage devices.

Figure. Pluggable Storage Architecture

The multipathing modules perform the following operations:

- Manage physical path claiming and unclaiming.

- Manage creation, registration, and deregistration of logical devices.

- Associate physical paths with logical devices.

- Support path failure detection and remediation.

- Process I/O requests to logical devices:

  - Select an optimal physical path for the request.

  - Depending on a storage device, perform specific actions necessary to handle path failures and I/O command retries.

- Support management tasks, such as abort or reset of logical devices.

VMware Multipathing Module

By default, ESX/ESXi provides an extensible multipathing module called the Native Multipathing Plug-In (NMP).

Generally, the VMware NMP supports all storage arrays listed on the VMware storage HCL and provides a default path selection algorithm based on the array type. The NMP associates a set of physical paths with a specific storage device, or LUN. The specific details of handling path failover for a given storage array are delegated to a Storage Array Type Plug-In (SATP). The specific details for determining which physical path is used to issue an I/O request to a storage device are handled by a Path Selection Plug-In (PSP). SATPs and PSPs are sub plug-ins within the NMP module.

Upon installation of ESX/ESXi, the appropriate SATP for an array you use will be installed automatically. You do not need to obtain or download any SATPs.

VMware SATPs

Storage Array Type Plug-Ins (SATPs) run in conjunction with the VMware NMP and are responsible for array-specific operations.

ESX/ESXi offers a SATP for every type of array that VMware supports. It also provides default SATPs that support non-specific active-active and ALUA storage arrays, and the local SATP for direct-attached devices.

Each SATP accommodates special characteristics of a certain class of storage arrays and can perform the array-specific operations required to detect path state and to activate an inactive path. As a result, the NMP module itself can work with multiple storage arrays without having to be aware of the storage device specifics.

After the NMP determines which SATP to use for a specific storage device and associates the SATP with the physical paths for that storage device, the SATP implements the tasks that include the following:

- Monitors the health of each physical path.

- Reports changes in the state of each physical path.

- Performs array-specific actions necessary for storage fail-over. For example, for active-passive devices, it can activate passive paths.

VMware PSPs

Path Selection Plug-Ins (PSPs) run with the VMware NMP and are responsible for choosing a physical path for I/O requests.

The VMware NMP assigns a default PSP for each logical device based on the SATP associated with the physical paths for that device. You can override the default PSP.

By default, the VMware NMP supports the following PSPs:

| Policy | Description |
| --- | --- |
| Most Recently Used (VMW_PSP_MRU) | Selects the path the ESX/ESXi host used most recently to access the given device. If this path becomes unavailable, the host switches to an alternative path and continues to use the new path while it is available. MRU is the default path policy for active-passive arrays. |
| Fixed (VMW_PSP_FIXED) | Uses the designated preferred path, if it has been configured. Otherwise, it uses the first working path discovered at system boot time. If the host cannot use the preferred path, it selects a random alternative available path. The host reverts back to the preferred path as soon as that path becomes available. Fixed is the default path policy for active-active arrays. CAUTION: If used with active-passive arrays, the Fixed path policy might cause path thrashing. |
| VMW_PSP_FIXED_AP | Extends the Fixed functionality to active-passive and ALUA mode arrays. |
| Round Robin (VMW_PSP_RR) | Uses a path selection algorithm that rotates through all available active paths, enabling load balancing across the paths. |

VMware NMP Flow of I/O

When a virtual machine issues an I/O request to a storage device managed by the NMP, the following process takes place.

- The NMP calls the PSP assigned to this storage device.

- The PSP selects an appropriate physical path on which to issue the I/O.

- The NMP issues the I/O request on the path selected by the PSP.

- If the I/O operation is successful, the NMP reports its completion.

- If the I/O operation reports an error, the NMP calls the appropriate SATP.

- The SATP interprets the I/O command errors and, when appropriate, activates the inactive paths.

- The PSP is called to select a new path on which to issue the I/O.

On the GeekSilverBlog there is a good article about PSA and NMP, see: http://geeksilver.wordpress.com/2010/08/17/vmware-vsphere-4-1-psa-pluggable-storage-architecture-understanding/

Duncan Epping has written an article on his Yellow-Bricks website. http://www.yellow-bricks.com/2009/03/19/pluggable-storage-architecture-exploring-the-next-version-of-esxvcenter/

Install and Configure PSA plug-ins

This topic is already covered under the other objectives. Eric Sloof's blog has an article with a video on the subject: StarWind iSCSI multi pathing with Round Robin and esxcli.

Another example can be found on the NTG Consult Weblog: vSphere4 ESX4: How to configure iSCSI Software initiator on ESX4 against a HP MSA iSCSI Storage system.

Understand different multipathing policy functionalities

The VMware storage blog post "vStorage Multi Paths Options in vSphere" (http://blogs.vmware.com/storage/2009/10/vstorage-multi-paths-options-in-vsphere.html) was not available at the time of writing; the text below was recovered from the Google cache.

Multi Path challenges

In most SAN deployments it is considered best practice to have redundant connections configured between the storage and the server. This often includes redundant host bus adapters, Fibre Channel switches, and controller ports to the storage array. This results in four or more separate paths connecting the server to the same storage target. These multiple paths offer protection against a single point of failure and permit load balancing.

In a VMware virtualization environment a single datastore can have active I/O on only a single path to the underlying storage target (LUN or NFS mountpoint) at a given time. Prior to vSphere, the storage stack in an ESX server could be configured to use any of the available paths, but only one at a time. If the active path fails, the ESX server detects the failure and fails over to an alternate path.

The two challenges that VMware native multipathing has addressed are (1) presenting a single path when several are available (aggregation) and (2) handling failover and failback when the active path is compromised (failover). In addition, the ability to alternate which path is active was supported through the introduction of round robin path selection in ESX release 3.5. Through the command line one can change the default parameters so that the path is switched after a certain number of blocks or a set number of transactions have gone across an active path. These values are not currently available in the vCenter interface and can only be adjusted via the command line.
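As a rough illustration of the command-line tuning mentioned above: on ESX/ESXi 4.x the Round Robin parameters live under the `esxcli nmp roundrobin` namespace. The helper below is a minimal sketch, not an official tool. The function name `rr_setconfig_cmd` is made up for this example, and it only prints the command so it can be reviewed before it is run on a host. Check `esxcli nmp roundrobin setconfig --help` on your build before relying on the option names.

```shell
# Hypothetical helper: print (do not execute) the esxcli command that sets
# the Round Robin switching threshold for one device.
# Usage: rr_setconfig_cmd DEVICE TYPE VALUE
#   TYPE is "iops" (switch after VALUE I/Os) or "bytes" (switch after VALUE bytes)
rr_setconfig_cmd() {
    device=$1
    type=$2
    value=$3
    case $type in
        iops|bytes) ;;
        *)
            echo "type must be iops or bytes" >&2
            return 1
            ;;
    esac
    # The value option has the same name as the type (--iops or --bytes).
    printf 'esxcli nmp roundrobin setconfig --device %s --type %s --%s %s\n' \
        "$device" "$type" "$type" "$value"
}
```

For example, `rr_setconfig_cmd naa.6006016095101200d2ca9f57c8c2de11 iops 1` prints a command that would switch paths after every I/O on that device. The device must already use VMW_PSP_RR for the setting to have any effect.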

Another issue that the ESX server storage stack had to address was how to treat a given storage device, as some arrays offered active/active (A/A) controllers and others had active/passive (A/P) controllers. In an active/active array, a given LUN can be addressed by more than one storage controller at the same time, whereas an active/passive array can access a LUN from only one controller at a time and requires control to be transferred to the other controller when access through that controller is needed. To deal with this difference in array capabilities, VMware had to place logic in the code for each type of storage array supported. That logic defined what capabilities the array had with regard to active/active or active/passive as well as other attributes.

Path selection and failback options included: Fixed, Most Recently Used and Round Robin. The Fixed path policy is recommended for A/A arrays. It allows the user to configure static load balancing of paths across ESX hosts. The MRU policy is recommended for A/P arrays. It prevents the possibility of path thrashing due to a partitioned SAN environment. Round Robin may be configured for either A/A or A/P arrays, but care must be taken to ensure that A/P arrays are not configured to automatically switch controllers. If A/P arrays are configured to automatically switch controllers (sometimes called pseudo A/A), performance may be degraded due to path ping-ponging.

vStorage API for MultiPathing

Pluggable Storage Architecture (PSA) was introduced in vSphere 4.0 to improve code modularisation and code encapsulation, and to enable 3rd party storage vendors' multipath software to be leveraged in the ESX server. It provides support for the storage devices on the existing ESX storage HCL through the use of Storage Array Type Plugins (SATP) for the new Native Multipathing Plugin (NMP) module. It also introduces an API for 3rd party storage vendors to build and certify multipath modules to be plugged into the VMware storage stack.

In addition to the VMware NMP, 3rd party storage vendors can create their own pluggable storage modules, either to replace the SATP that VMware offers, or to provide a Path Selection Plug-in (PSP) or Multipathing Plug-in (MPP) that leverages their own storage-array-specific intelligence for increased performance and availability.

As with earlier versions of ESX server, the VI admin can set certain path selection preferences via vCenter. The path selection options of Fixed, MRU and Round Robin still exist as options in vSphere. A default value, set by the SATP that matched the storage array, could be changed even if that was not advised as best practice. But with a new 3rd party SATP, the vendor could set the defaults that were optimized for their array. Through this interface, ALUA became an option that could be supported.

The VMware Native MultiPath module has sub-plugins for failover and load balancing.

- Storage Array Type Plug-in (SATP) to handle failover.

- Path Selection Plug-in (PSP) to handle load balancing.

- NMP “associates” a SATP with the set of paths from a given type of array.

- NMP “associates” a PSP with a logical device.

- NMP specifies a default PSP for every logical device based on the SATP associated with the physical paths for that device.

- NMP allows the default PSP for a device to be overridden.

Rules can be associated with the NMP as well as with 3rd party MPP modules. Those rules are stored in the /etc/vmware/esx.conf file.

Rules govern the operation of both the NMP and the MPP modules.

In ESX 4.0, rules are configurable only through the CLI and not through vCenter.

To see the list of rules defined use the following command:

# esxcli corestorage claimrule list

To see the list of rules associated with a certain SATP use:

# esxcli nmp satp listrules -s <specific SATP>

The primary functions of an SATP are to:

- Implement the switching of physical paths to the array when a path has failed.

- Determine when a hardware component of a physical path has failed.

- Monitor the hardware state of the physical paths to the storage array.

VMware provides a default SATP for each supported array as well as a generic SATP (an active/active version and an active/passive version) for non-specified storage arrays.

To see the complete list of defined Storage Array Type Plugins (SATP) for the VMware Native Multipath Plugin (NMP), use the following commands:

# esxcli nmp satp list

| Name | Default PSP | Description |
| --- | --- | --- |
| VMW_SATP_ALUA_CX | VMW_PSP_FIXED | Supports EMC CX that use the ALUA protocol |
| VMW_SATP_SVC | VMW_PSP_FIXED | Supports IBM SVC |
| VMW_SATP_MSA | VMW_PSP_MRU | Supports HP MSA |
| VMW_SATP_EQL | VMW_PSP_FIXED | Supports EqualLogic arrays |
| VMW_SATP_INV | VMW_PSP_FIXED | Supports EMC Invista |
| VMW_SATP_SYMM | VMW_PSP_FIXED | Supports EMC Symmetrix |
| VMW_SATP_LSI | VMW_PSP_MRU | Supports LSI and other arrays compatible with the SIS 6.10 in non-AVT mode |
| VMW_SATP_EVA | VMW_PSP_FIXED | Supports HP EVA |
| VMW_SATP_DEFAULT_AP | VMW_PSP_MRU | Supports non-specific active/passive arrays |
| VMW_SATP_CX | VMW_PSP_MRU | Supports EMC CX that do not use the ALUA protocol |
| VMW_SATP_ALUA | VMW_PSP_MRU | Supports non-specific arrays that use the ALUA protocol |
| VMW_SATP_DEFAULT_AA | VMW_PSP_FIXED | Supports non-specific active/active arrays |
| VMW_SATP_LOCAL | VMW_PSP_FIXED | Supports direct attached devices |
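When checking which PSP a device will get by default, it can help to look up a single SATP in this listing instead of reading the whole output. The filter below is a small sketch that assumes the whitespace-separated layout shown above (SATP name in the first column, default PSP in the second). The function name `default_psp` is invented for this example.

```shell
# Hypothetical filter: read "esxcli nmp satp list" output on stdin and print
# the default PSP of the SATP named in $1. Returns non-zero if the SATP is
# not present in the listing.
default_psp() {
    awk -v satp="$1" '
        $1 == satp { print $2; found = 1 }
        END        { exit !found }
    '
}
```

On a host this would be used as `esxcli nmp satp list | default_psp VMW_SATP_MSA`, which for the listing above prints `VMW_PSP_MRU`.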

To take advantage of certain storage specific characteristics of an array, one can install a 3rd party SATP provided by the vendor of the storage array, or by a software company specializing in optimizing the use of your storage array.

Path Selection Plug-in (PSP).

The primary function of the PSP module is to determine which physical path is to be used for an I/O request to a storage device. The PSP is a sub-plug-in to the NMP and handles load balancing. VMware offers three PSPs: Fixed, Most Recently Used and Round Robin.

To see a complete list of Path Selection Plugins (PSP) use the following command:

# esxcli nmp psp list

| Name | Description |
| --- | --- |
| VMW_PSP_MRU | Most Recently Used Path Selection |
| VMW_PSP_RR | Round Robin Path Selection |
| VMW_PSP_FIXED | Fixed Path Selection |

Fixed: Uses the designated preferred path, if it has been configured. Otherwise, it uses the first working path discovered at system boot time. If the ESX host cannot use the preferred path, it selects a random alternative available path. The ESX host automatically reverts back to the preferred path as soon as the path becomes available.

Most Recently Used (MRU): Uses the first working path discovered at system boot time. If this path becomes unavailable, the ESX host switches to an alternative path and continues to use the new path while it is available.

Round Robin (RR): Uses automatic path selection, rotating through all available paths and enabling load balancing across them. It only uses active paths and is of most use on active/active arrays. On active/passive arrays, it load balances between ports on the same storage processor. The Round Robin policy is not supported in MSCS environments.
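The practical difference between Fixed and MRU is what happens after a failed path comes back. The toy model below is only an illustration of the behaviour described above, not VMware code. The function `select_path` and its argument format are invented for this sketch, and where the text says Fixed picks a random alternative path, the sketch simply takes the first available one so that the result is predictable.

```shell
# Toy model of the Fixed and MRU policies; not VMware code.
# Usage: select_path POLICY PREFERRED CURRENT PATH=STATE [PATH=STATE ...]
#   POLICY     fixed or mru
#   PREFERRED  preferred path (only used by fixed)
#   CURRENT    path used for the previous I/O
#   STATE      up or down
select_path() {
    policy=$1
    preferred=$2
    current=$3
    shift 3

    first_up=""
    preferred_up=no
    current_up=no
    for entry in "$@"; do
        path=${entry%%=*}     # text before the "="
        state=${entry#*=}     # text after the "="
        if [ "$state" != up ]; then
            continue
        fi
        if [ -z "$first_up" ]; then first_up=$path; fi
        if [ "$path" = "$preferred" ]; then preferred_up=yes; fi
        if [ "$path" = "$current" ]; then current_up=yes; fi
    done

    case $policy in
        fixed)
            # Fail back to the preferred path whenever it is available.
            if [ "$preferred_up" = yes ]; then
                echo "$preferred"
            else
                echo "$first_up"
            fi
            ;;
        mru)
            # Stay on the current path for as long as it is available.
            if [ "$current_up" = yes ]; then
                echo "$current"
            else
                echo "$first_up"
            fi
            ;;
        *)
            echo "unknown policy: $policy" >&2
            return 1
            ;;
    esac
}
```

Suppose the host failed over from `vmhba1:C0:T0:L0` to `vmhba2:C0:T0:L0` and the first path has since recovered. `select_path fixed vmhba1:C0:T0:L0 vmhba2:C0:T0:L0 vmhba1:C0:T0:L0=up vmhba2:C0:T0:L0=up` prints `vmhba1:C0:T0:L0` (failback), while the same call with `mru` prints `vmhba2:C0:T0:L0` (no failback).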

3rd party vendors can provide their own PSP to take advantage of more complex I/O load balancing algorithms that their storage array might offer. However, 3rd party storage vendors are not required to create plug-ins. All the storage array specific code supported in ESX release 3.x has been ported to ESX release 4 as a SATP plug-in.

MultiPath Plugins (MPP) or Multipath Enhancement Module (MEM)

Multipath Plugins (also referred to as Multipath Enhancement Modules (MEM)) enable storage partners to leverage intelligence within their array to present one connection that is backed by an aggregation of several connections. Using the intelligence in the array with their own vendor module in the ESX server, these modules can increase performance through load balancing and increase the availability of the multiple connections. These modules offer the most resilient and highly available multipathing for VMware ESX servers by providing coordinated path management between the ESX server and the storage.

An MPP "claims" a physical path and "manages" or "exports" a logical device. The MPP is the only code that can associate a physical path with a logical device, so a path cannot be managed by both the NMP and an MPP at the same time.

The PSA introduces support for two more features of the SCSI 3 (SPC-3) protocol: TPGS and ALUA.

However, although SCSI-3 is supported for some VMFS functions (LSI emulation for MSCS environments) the volume manager functions within VMFS are still SCSI-2 compliant. As such, the 2TB per LUN limit still applies.

Target Port Group Support (TPGS) is a mechanism that gives storage devices the ability to specify path performance and other characteristics to a host, such as an ESX server. A Target Port Group (TPG) is defined as a set of target ports that are in the same target port Asymmetric Access State (AAS) at all times with respect to a given LUN.

Hosts can use AAS of a TPG to prioritize paths and make failover and load balancing decisions.

Control and management of ALUA AASs can operate in 1 of 4 defined modes:

- Not Supported (Report and Set TPGs commands invalid).

- Implicit (TPG AASs are set and managed by the array only, and reported with the Report TPGs command)

- Explicit (TPG AASs are set and managed by the host only with the Set TPGs command, and reported with the Report TPGs command)

- Both (TPG AASs can be set and managed by either the array or the host)

Asymmetric Logical Unit Access (ALUA) is a standard-based method for discovering and managing multiple paths to a SCSI LUN. It is described in the T10 SCSI-3 specification SPC-3, section 5.8. It provides a standard way to allow devices to report the states of their respective target ports to hosts. Hosts can prioritize paths and make failover/load balancing decisions.

Since target ports could be on different physical units, ALUA allows different levels of access for target ports to each LUN. ALUA will route I/O to a particular port to achieve best performance.

ALUA Follow-over Feature:

A follow-over scheme is implemented to minimize path thrashing in cluster environments with shared LUNs. Follow-over is supported for explicit ALUA only and is not part of the ALUA standard.

When an ESX host detects a TPG AAS change that it did not cause:

- It will not try to revert this change even if it only has access to non-optimized paths

- Thus, it follows the TPG of the array

For more information see two blogposts at VirtualAusterity.

- http://virtualausterity.blogspot.com/2010/01/why-vmware-psa-is-helping-me-to-save.html

- http://virtualausterity.blogspot.com/2010/01/why-vmware-psa-is-helping-me-to-save_13.html

Perform command line configuration of multipathing options

See VMware KB 1003973. Obtaining LUN pathing information for ESX hosts

To obtain LUN multipathing information from the ESX host command line:

1. Log in to the ESX host console.

2. Type esxcfg-mpath -l and press Enter.

The output appears similar to:

Runtime Name: vmhba1:C0:T0:L0

Device: naa.6006016095101200d2ca9f57c8c2de11

Device Display Name: DGC Fibre Channel Disk (naa.6006016095101200d2ca9f57c8c2de11)

Adapter: vmhba1 Channel: 0 Target: 0 LUN: 0

Adapter Identifier: fc.2000001b32865b73:2100001b32865b73

Target Identifier: fc.50060160b020f2d9:500601603020f2d9

Plugin: NMP

State: active

Transport: fc

Adapter Transport Details: WWNN: 20:00:00:1b:32:86:5b:73 WWPN: 21:00:00:1b:32:86:5b:73

Target Transport Details: WWNN: 50:06:01:60:b0:20:f2:d9 WWPN: 50:06:01:60:30:20:f2:d9

List all paths with abbreviated information.

# esxcfg-mpath -L

vmhba2:C0:T0:L0 state:active naa.6006016043201700d67a179ab32fdc11 vmhba2 0 0 0 NMP active san fc.2000001b32017d07:2100001b32017d07 fc.50060160b021b9df:500601603021b9df

vmhba35:C0:T0:L0 state:active naa.6000eb36d830c008000000000000001c vmhba35 0 0 0 NMP active san iqn.1998-01.com.vmware:cs-tse-f116-6a88c8f1 00023d000001,iqn.2003-10.com.lefthandnetworks:vi40:28:vol0,t,1

List all Paths with adapter and device mappings.

# esxcfg-mpath -m

vmhba2:C0:T0:L0 vmhba2 fc.2000001b32017d07:2100001b32017d07 fc.50060160b021b9df:500601603021b9df naa.6006016043201700d67a179ab32fdc11

vmhba35:C0:T0:L0 vmhba35 iqn.1998-01.com.vmware:cs-tse-f116-6a88c8f1 00023d000001,iqn.2003-10.com.lefthandnetworks:vi40:28:vol0,t,1 naa.6000eb36d830c008000000000000001c

List all devices with their corresponding paths.

# esxcfg-mpath -b

naa.6000eb36d830c008000000000000001c

vmhba35:C0:T0:L0

naa.6006016043201700f008a62dec36dc11

vmhba2:C0:T1:L2

vmhba2:C0:T0:L2
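A quick way to check redundancy is to count the paths behind each device. The filter below is a minimal sketch that assumes the layout shown above: a device identifier on its own line, followed by one line per path whose first field starts with `vmhba`. The function name `count_paths` is made up for this example, and real output may carry extra columns, which the filter ignores.

```shell
# Hypothetical filter: read "esxcfg-mpath -b" output on stdin and print
# "<device> <number of paths>" for every device, in the order listed.
count_paths() {
    awk '
        NF == 0 { next }                 # skip blank lines
        $1 ~ /^vmhba/ {                  # path line: count it for the current device
            if (dev != "") n[dev]++
            next
        }
        {                                # any other line starts a new device
            dev = $1
            if (!(dev in n)) { n[dev] = 0; order[++k] = dev }
        }
        END { for (i = 1; i <= k; i++) print order[i], n[order[i]] }
    '
}
```

Run as `esxcfg-mpath -b | count_paths`. For the sample output above it reports one path for `naa.6000eb36d830c008000000000000001c` and two for `naa.6006016043201700f008a62dec36dc11`. A device with a single path has no failover target.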

Configuring multipath settings for your storage in vSphere Client

To configure multipath settings for your storage in vSphere Client:

- Click Storage.

- Select a datastore or mapped LUN.

- Click Properties.

- In the Properties dialog, select the desired extent, if necessary.

- Click Extent Device > Manage Paths and configure the paths in the Manage Path dialog.

Change a multipath policy

Generally, you do not have to change the default multipathing settings your host uses for a specific storage device. However, if you want to make any changes, you can use the Manage Paths dialog box to modify a path selection policy and specify the preferred path for the Fixed policy.

Procedure

- Open the Manage Paths dialog box either from the Datastores or Devices view.

- Select a path selection policy. By default, VMware supports the following path selection policies. If you have a third-party PSP installed on your host, its policy also appears on the list.

- Fixed (VMW_PSP_FIXED)

- Fixed AP (VMW_PSP_FIXED_AP)

- Most Recently Used (VMW_PSP_MRU)

- Round Robin (VMW_PSP_RR)

- For the fixed policy, specify the preferred path by right-clicking the path you want to assign as the preferred path, and selecting Preferred.

- Click OK to save your settings and exit the dialog box.
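The same change can be made from the command line. On ESX/ESXi 4.x the policy of a single device is set with `esxcli nmp device setpolicy`. The helper below is a sketch only: the name `set_psp_cmd` is invented here, it prints the command instead of running it, and it accepts just the four VMware policies listed above, so a third-party PSP name would have to be added to the list.

```shell
# Hypothetical helper: print (do not execute) the esxcli command that assigns
# a path selection policy to one device.
# Usage: set_psp_cmd DEVICE PSP
set_psp_cmd() {
    device=$1
    psp=$2
    case $psp in
        VMW_PSP_FIXED|VMW_PSP_FIXED_AP|VMW_PSP_MRU|VMW_PSP_RR) ;;
        *)
            echo "unknown PSP: $psp" >&2
            return 1
            ;;
    esac
    printf 'esxcli nmp device setpolicy --device %s --psp %s\n' "$device" "$psp"
}
```

For example, `set_psp_cmd naa.6006016095101200d2ca9f57c8c2de11 VMW_PSP_RR` prints the command that would switch that device to Round Robin. `esxcli nmp device list` can be used afterwards to confirm the policy in effect.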

Disable Paths

You can temporarily disable paths for maintenance or other reasons. You can do so using the vSphere Client.

Procedure

- Open the Manage Paths dialog box either from the Datastores or Devices view.

- In the Paths panel, right-click the path to disable, and select Disable.

- Click OK to save your settings and exit the dialog box.

You can also disable a path from the adapter's Paths view by right-clicking the path in the list and selecting Disable.
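Paths can also be disabled from the command line. To the best of my knowledge, `esxcfg-mpath` on ESX 4.x (and `vicfg-mpath` in the vSphere CLI, with the usual connection options added) accepts `--state` together with `--path` for this. Treat the option names as an assumption and confirm them with `esxcfg-mpath --help`. The helper name `path_state_cmd` is invented for this sketch, and it only prints the command.

```shell
# Hypothetical helper: print (do not execute) the command that disables or
# re-enables one path.
# Usage: path_state_cmd PATH STATE     (STATE is "off" or "active")
path_state_cmd() {
    path=$1
    state=$2
    case $state in
        active|off) ;;
        *)
            echo "state must be active or off" >&2
            return 1
            ;;
    esac
    printf 'esxcfg-mpath --state %s --path %s\n' "$state" "$path"
}
```

`path_state_cmd vmhba1:C0:T0:L0 off` prints the command to disable the path before maintenance, and the same call with `active` prints the command to bring it back.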

Configure Software iSCSI port binding

See: iSCSI SAN Configuration Guide.

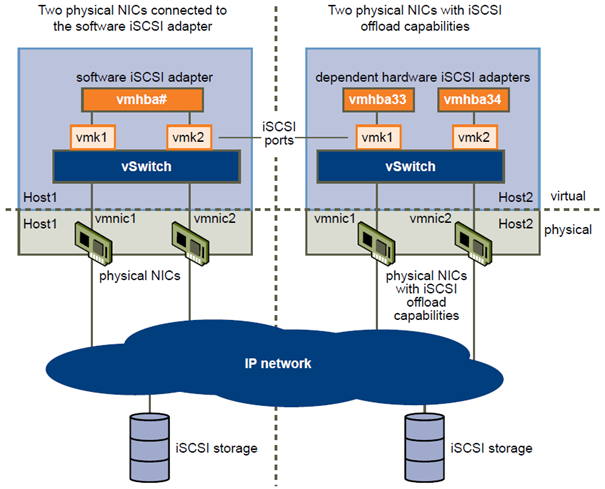

With the software-based iSCSI implementation, you can use standard NICs to connect your host to a remote iSCSI target on the IP network. The software iSCSI adapter that is built into ESX/ESXi facilitates this connection by communicating with the physical NICs through the network stack.

When you connect to a vCenter Server or a host with the vSphere Client, you can see the software iSCSI adapter on the list of your storage adapters. Only one software iSCSI adapter appears. Before you can use the software iSCSI adapter, you must set up networking, enable the adapter, and configure parameters such as discovery addresses and CHAP. The software iSCSI adapter configuration workflow includes these steps:

- Configure the iSCSI networking by creating ports for iSCSI traffic.

- Enable the software iSCSI adapter.

- If you use multiple NICs for the software iSCSI multipathing, perform the port binding by connecting all iSCSI ports to the software iSCSI adapter.

- If needed, enable Jumbo Frames. Jumbo Frames must be enabled for each vSwitch through the vSphere CLI.

- Configure discovery addresses.

- Configure CHAP parameters.
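For the Jumbo Frames step in the workflow above, the MTU of a vSwitch is set with the `-m` option of `esxcfg-vswitch` (or `vicfg-vswitch` in the vSphere CLI, with connection options added). The sketch below only prints the commands for a list of vSwitches. The function name `jumbo_cmds` and the vSwitch names in the example are assumptions for illustration.

```shell
# Hypothetical helper: print (do not execute) one MTU command per vSwitch.
# Usage: jumbo_cmds MTU VSWITCH [VSWITCH ...]
jumbo_cmds() {
    mtu=$1
    shift
    for vswitch in "$@"; do
        printf 'esxcfg-vswitch -m %s %s\n' "$mtu" "$vswitch"
    done
}
```

`jumbo_cmds 9000 vSwitch1 vSwitch2` prints one `esxcfg-vswitch -m 9000 ...` line per vSwitch. The VMkernel ports and the physical network must be configured for the same MTU, otherwise the larger frames will not pass end to end.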

Configure the iSCSI networking by creating ports for iSCSI traffic

If you use the software iSCSI adapter or dependent hardware iSCSI adapters, you must set up the networking for iSCSI before you can enable and configure your iSCSI adapters. Networking configuration for iSCSI involves opening a VMkernel iSCSI port for the traffic between the iSCSI adapter and the physical NIC.

Depending on the number of physical NICs you use for iSCSI traffic, the networking setup can be different.

- If you have a single physical NIC, create one iSCSI port on a vSwitch connected to the NIC. VMware recommends that you designate a separate network adapter for iSCSI. Do not use iSCSI on 100Mbps or slower adapters.

- If you have two or more physical NICs for iSCSI, create a separate iSCSI port for each physical NIC and use the NICs for iSCSI multipathing.

Create iSCSI Port for a Single NIC

Use this task to connect the VMkernel, which runs services for iSCSI storage, to a physical NIC. If you have just one physical network adapter to be used for iSCSI traffic, this is the only procedure you must perform to set up your iSCSI networking.

Procedure

- Log in to the vSphere Client and select the host from the inventory panel.

- Click the Configuration tab and click Networking.

- In the Virtual Switch view, click Add Networking.

- Select VMkernel and click Next.

- Select Create a virtual switch to create a new vSwitch.

- Select a NIC you want to use for iSCSI traffic.

IMPORTANT If you are creating a port for the dependent hardware iSCSI adapter, make sure to select the NIC that corresponds to the iSCSI component.

- Click Next.

- Enter a network label. Network label is a friendly name that identifies the VMkernel port that you are creating, for example, iSCSI.

- Click Next.

- Specify the IP settings and click Next.

- Review the information and click Finish.

Using Multiple NICs for Software and Dependent Hardware iSCSI

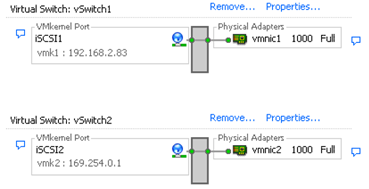

If your host has more than one physical NIC for iSCSI, for each physical NIC, create a separate iSCSI port using 1:1 mapping.

To achieve the 1:1 mapping, designate a separate vSwitch for each network adapter and iSCSI port pair.

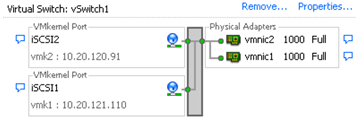

An alternative is to add all NIC and iSCSI port pairs to a single vSwitch. You must override the default setup and make sure that each port maps to only one corresponding active NIC.

After you map iSCSI ports to network adapters, use the esxcli command to bind the ports to the iSCSI adapters.

With dependent hardware iSCSI adapters, perform port binding, whether you use one NIC or multiple NICs.

Create Additional iSCSI Ports for Multiple NICs

Use this task if you have two or more NICs you can designate for iSCSI and you want to connect all of your iSCSI NICs to a single vSwitch. In this task, you associate VMkernel iSCSI ports with the network adapters using 1:1 mapping.

You now need to connect additional NICs to the existing vSwitch and map them to corresponding iSCSI ports.

NOTE If you use a vNetwork Distributed Switch with multiple dvUplinks, for port binding, create a separate dvPort group for each physical NIC. Then set the team policy so that each dvPort group has only one active dvUplink.

For detailed information on vNetwork Distributed Switches, see the Networking section of the ESX/ESXi Configuration Guide.

Prerequisites

You must create a vSwitch that maps an iSCSI port to a physical NIC designated for iSCSI traffic.

Procedure

- Log in to the vSphere Client and select the host from the inventory panel.

- Click the Configuration tab and click Networking.

- Select the vSwitch that you use for iSCSI and click Properties.

- Connect additional network adapters to the vSwitch.

- In the vSwitch Properties dialog box, click the Network Adapters tab and click Add.

- Select one or more NICs from the list and click Next. With dependent hardware iSCSI adapters, make sure to select only those NICs that have a corresponding iSCSI component.

- Review the information on the Adapter Summary page, and click Finish. The list of network adapters reappears, showing the network adapters that the vSwitch now claims.

- Create iSCSI ports for all NICs that you connected. The number of iSCSI ports must correspond to the number of NICs on the vSwitch.

- In the vSwitch Properties dialog box, click the Ports tab and click Add.

- Select VMkernel and click Next.

- Under Port Group Properties, enter a network label, for example iSCSI, and click Next.

- Specify the IP settings and click Next. When you enter subnet mask, make sure that the NIC is set to the subnet of the storage system it connects to.

- Review the information and click Finish.

CAUTION If the NIC you use with your iSCSI adapter, either software or dependent hardware, is not in the same subnet as your iSCSI target, your host is not able to establish sessions from this network adapter to the target.

- Map each iSCSI port to just one active NIC. By default, for each iSCSI port on the vSwitch, all network adapters appear as active. You must override this setup, so that each port maps to only one corresponding active NIC. For example, iSCSI port vmk1 maps to vmnic1, port vmk2 maps to vmnic2, and so on.

- On the Ports tab, select an iSCSI port and click Edit.

- Click the NIC Teaming tab and select Override vSwitch failover order.

- Designate only one adapter as active and move all remaining adapters to the Unused Adapters category.

- Repeat the mapping steps (edit the port, override the failover order, leave one active adapter) for each iSCSI port on the vSwitch.
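The CAUTION in the procedure above comes down to a bitwise comparison: the VMkernel port and the iSCSI target are in the same subnet when their addresses match after the netmask is applied. A minimal sketch of that check in POSIX shell follows. The function names `ip_to_int` and `same_subnet` and all addresses are made up for illustration; they are not part of any VMware tool.

```shell
#!/bin/sh
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
    old_ifs=$IFS
    IFS=.
    set -- $1          # split the address into its four octets
    IFS=$old_ifs
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Exit status 0 when both addresses fall in the same subnet.
# Arguments: <vmk_ip> <target_ip> <netmask>
same_subnet() {
    a=$(ip_to_int "$1")
    b=$(ip_to_int "$2")
    m=$(ip_to_int "$3")
    [ $(( a & m )) -eq $(( b & m )) ]
}

# Hypothetical addresses: a VMkernel port and an iSCSI target portal.
if same_subnet 10.0.1.11 10.0.1.50 255.255.255.0; then
    echo "port and target share a subnet"
else
    echo "port and target are in different subnets"
fi
```

Running the check for each iSCSI port before binding is a cheap way to catch a mistyped mask or address.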

What to do next

After performing this task, use the esxcli command to bind the iSCSI ports to the software iSCSI or dependent hardware iSCSI adapters.

Bind iSCSI Ports to iSCSI Adapters

Bind an iSCSI port that you created for a NIC to an iSCSI adapter. With the software iSCSI adapter, perform this task only if you set up two or more NICs for the iSCSI multipathing. If you use dependent hardware iSCSI adapters, the task is required regardless of whether you have multiple adapters or one adapter.

Procedure

- Identify the name of the iSCSI port assigned to the physical NIC. The vSphere Client displays the port’s name below the network label. In the following graphic, the ports’ names are vmk1 and vmk2.

- Use the vSphere CLI command to bind the iSCSI port to the iSCSI adapter.

esxcli swiscsi nic add -n port_name -d vmhba

IMPORTANT For software iSCSI, repeat this command for each iSCSI port, connecting all ports with the software iSCSI adapter. With dependent hardware iSCSI, make sure to bind each port to an appropriate corresponding adapter.

- Verify that the port was added to the iSCSI adapter.

esxcli swiscsi nic list -d vmhba

- Use the vSphere Client to rescan the iSCSI adapter.
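Because the bind command has to be repeated for every iSCSI port with software iSCSI, a small loop can generate the commands. The sketch below only prints them and executes nothing. `bind_ports` is a hypothetical helper name, and any connection options your vSphere CLI setup needs are left out.

```shell
#!/bin/sh
# Print the bind command for each iSCSI port, then the verification command.
# Usage: bind_ports <vmhba> <vmk>...
bind_ports() {
    adapter=$1
    shift
    for port in "$@"; do
        echo "esxcli swiscsi nic add -n $port -d $adapter"
    done
    echo "esxcli swiscsi nic list -d $adapter"
}

# Adapter and port names are examples only.
bind_ports vmhba33 vmk1 vmk2
```

Review the printed commands first, then run them where the vSphere CLI is available.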

Binding iSCSI Ports to iSCSI Adapters

Review examples about how to bind multiple ports that you created for physical NICs to the software iSCSI adapter or multiple dependent hardware iSCSI adapters.

Example 1. Connecting iSCSI Ports to the Software iSCSI Adapter

This example shows how to connect the iSCSI ports vmk1 and vmk2 to the software iSCSI adapter vmhba33.

- Connect vmk1 to vmhba33: esxcli swiscsi nic add -n vmk1 -d vmhba33.

- Connect vmk2 to vmhba33: esxcli swiscsi nic add -n vmk2 -d vmhba33.

- Verify vmhba33 configuration: esxcli swiscsi nic list -d vmhba33.

Both vmk1 and vmk2 should be listed.

If you display the Paths view for the vmhba33 adapter through the vSphere Client, you see that the adapter uses two paths to access the same target. The runtime names of the paths are vmhba33:C1:T1:L0 and vmhba33:C2:T1:L0. C1 and C2 in this example indicate the two network adapters that are used for multipathing.
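To make the runtime name format concrete, the following sketch splits a name such as vmhba33:C1:T1:L0 into its parts. It assumes the usual adapter:Channel:Target:LUN layout of runtime names. `parse_runtime_name` is a hypothetical helper, not an esxcli feature.

```shell
#!/bin/sh
# Split a runtime name (adapter:C<channel>:T<target>:L<lun>) into fields.
parse_runtime_name() {
    old_ifs=$IFS
    IFS=:
    set -- $1          # split on the colons
    IFS=$old_ifs
    # Strip the C, T and L prefixes from the numeric parts.
    echo "adapter=$1 channel=${2#C} target=${3#T} lun=${4#L}"
}

parse_runtime_name vmhba33:C1:T1:L0
parse_runtime_name vmhba33:C2:T1:L0
```

For the two paths in this example only the channel differs, which matches the two bound network adapters.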

Example 2. Connecting iSCSI Ports to Dependent Hardware iSCSI Adapters

This example shows how to connect the iSCSI ports vmk1 and vmk2 to corresponding hardware iSCSI adapters vmhba33 and vmhba34.

- Connect vmk1 to vmhba33: esxcli swiscsi nic add -n vmk1 -d vmhba33.

- Connect vmk2 to vmhba34: esxcli swiscsi nic add -n vmk2 -d vmhba34.

- Verify vmhba33 configuration: esxcli swiscsi nic list -d vmhba33.

- Verify vmhba34 configuration: esxcli swiscsi nic list -d vmhba34.
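With dependent hardware iSCSI each port goes to its own adapter, so the input is a list of port and adapter pairs rather than one adapter with many ports. A possible sketch, again printing the commands instead of running them. `bind_pairs` and the `port:adapter` argument format are assumptions made for this example.

```shell
#!/bin/sh
# Print one bind command per port:adapter pair, then one verification
# command per adapter.
# Usage: bind_pairs <vmk>:<vmhba>...
bind_pairs() {
    for pair in "$@"; do
        port=${pair%%:*}       # text before the colon
        adapter=${pair#*:}     # text after the colon
        echo "esxcli swiscsi nic add -n $port -d $adapter"
    done
    for pair in "$@"; do
        echo "esxcli swiscsi nic list -d ${pair#*:}"
    done
}

bind_pairs vmk1:vmhba33 vmk2:vmhba34
```

Keeping the pairs explicit makes it harder to bind a port to the wrong adapter by accident.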

Disconnect iSCSI Ports from iSCSI Adapters

If you need to make changes in the networking configuration that you use for iSCSI traffic, for example, remove a NIC or an iSCSI port, make sure to disconnect the iSCSI port from the iSCSI adapter.

IMPORTANT If active iSCSI sessions exist between your host and targets, you cannot disconnect the iSCSI port.

Procedure

- Use the vSphere CLI to disconnect the iSCSI port from the iSCSI adapter.

esxcli swiscsi nic remove -n port_name -d vmhba

- Verify that the port was disconnected from the iSCSI adapter.

esxcli swiscsi nic list -d vmhba

- Use the vSphere Client to rescan the iSCSI adapter.

Links

Documents and manuals

vSphere Command-Line Interface Installation and Scripting Guide: http://www.vmware.com/pdf/vsphere4/r41/vsp4_41_vcli_inst_script.pdf

ESX Configuration Guide: http://www.vmware.com/pdf/vsphere4/r41/vsp_41_esx_server_config.pdf

ESXi Configuration Guide: http://www.vmware.com/pdf/vsphere4/r41/vsp_41_esxi_server_config.pdf

Fibre Channel SAN Configuration Guide: http://www.vmware.com/pdf/vsphere4/r41/vsp_41_san_cfg.pdf

iSCSI SAN Configuration Guide: http://www.vmware.com/pdf/vsphere4/r41/vsp_41_iscsi_san_cfg.pdf

Source

- http://kb.vmware.com/selfservice/microsites/search.do?language=en_US&cmd=displayKC&externalId=1011375

- http://www.vmware.com/pdf/vsphere4/r41/vsp_41_san_cfg.pdf

- http://geeksilver.wordpress.com/2010/08/17/vmware-vsphere-4-1-psa-pluggable-storage-architecture-understanding/

- http://www.yellow-bricks.com/2009/03/19/pluggable-storage-architecture-exploring-the-next-version-of-esxvcenter/

- http://www.ntpro.nl/blog/archives/1520-StarWind-iSCSI-multi-pathing-with-Round-Robin-and-esxcli.html

- http://blog.ntgconsult.dk/2010/02/vsphere4-esx4-how-to-configure-iscsi-software-initiator-on-esx4-against-a-hp-msa-iscsi-storage.html

- http://blogs.vmware.com/storage/2009/10/vstorage-multi-paths-options-in-vsphere.html

- http://virtualausterity.blogspot.com/2010/01/why-vmware-psa-is-helping-me-to-save.html

- http://virtualausterity.blogspot.com/2010/01/why-vmware-psa-is-helping-me-to-save_13.html

- http://kb.vmware.com/kb/1003973

- http://www.vmware.com/pdf/vsphere4/r41/vsp_41_iscsi_san_cfg.pdf

If there are things missing or incorrect please let me know.

Disclaimer.

The information in this article is provided “AS IS” with no warranties, and confers no rights. This article does not represent the thoughts, intentions, plans or strategies of my employer. It is solely my opinion.